Overview

Background

I’ve been thinking about writing this article for a while, with the premise of “what does IT look like in the future?” In a digital economy, the role of technology in The Intelligent Enterprise will certainly continue to be creating value and competitive business advantage. That being said, one can reasonably assume a few things that are true today for medium to large organizations will continue to be part of that reality as well, namely:

- The technology footprint will be complex and heterogeneous in its makeup, and even more so to the degree that there is a history of acquisitions

- Cost will always be a concern, especially to the degree it exceeds value delivered (this is explored in my article on Optimizing the Value of IT)

- Agility will be important in adopting and integrating new capabilities rapidly, especially given the rate of technology advancement only appears to be accelerating over time

- Talent management will be complex given the variety of technologies present will be highly diverse (something I’ve started to address in my Workforce and Sourcing Strategy Overview article)

My hope is to provide some perspective in this article on where I believe things will ultimately move in technology, in the underlying makeup of the footprint itself, how we apply capabilities against it, and how to think about moving from our current reality to that environment. Certainly, all five of the dimensions I outlined in my article on Creating Value Through Strategy will continue to apply at an overall strategy level (four of which are referenced in the bullet points above).

A Note on My Selfish Bias…

Before diving further into the topic at hand, I want to acknowledge that I am coming from a place where I love software development and the process surrounding it. I taught myself to program in the third grade (in Apple Basic), got my degree in Computer Science, started as a software engineer, and taught myself Java and .NET for fun years after I stopped writing code as part of my “day job”. I love the creative process for conceptualizing a problem, taking a blank sheet of paper (or whiteboard), designing a solution, pulling up a keyboard, putting on some loud music, shutting out distractions, and ultimately having technology that solves that problem. It is a very fun and rewarding thing to explore those boundaries of what’s possible and balance the creative aspects of conceptual design with the practical realities and physical constraints of technology development.

All that being said, insofar as this article is concerned, when we conceptualize the future of IT, I wanted to put a foundational position statement forward to frame where I’m going from here, which is:

Just because something is cool and I can do it, doesn’t mean I should.

That is a very difficult thing to internalize for those of us who live and breathe technology professionally. Pride of authorship is a real thing and, if we’re to embrace the possibilities of a more capable future, we need to apply our energies in the right way to maximize the value we want to create in what we do.

The Producer/Consumer Model

Where the Challenge Exists Today

The fundamental problem I see in technology as a whole today (I realize I’m generalizing here) is that we tend to want to be good at everything, build too much, customize more than we should, and throw caution to the wind when it comes to things like standards and governance as inconveniences that slow us down in the “deliver now” environment in which we generally operate (see my article Fast and Cheap, Isn’t Good for more on this point).

Where that leaves us is bloated, heavy, expensive, and slow… and it’s not good. For all of our good intentions, IT doesn’t always have the best reputation for understanding, articulating, or delivering value in business terms and, in quite a lot of situations I’ve seen over the years, our delivery story can be marred with issues that don’t create a lot of confidence when the next big idea comes along and we want to capitalize on the opportunity it presents.

I’m being relatively negative on purpose here, but the point is to start with the humility of acknowledging the situation that exists in a lot of medium to large IT environments, because charting a path to the future requires a willingness to accept that reality and to create sustainable change in its place. The good news, from my experience, is there is one thing going for most IT organizations I’ve seen that can be a critical element in pivoting to where we need to be: a strong sense of ownership. That ownership may show up as frustration with the status quo depending on the organization itself, but I’ve rarely seen an IT environment where the practitioners themselves don’t feel ownership for the solutions they build, maintain, and operate or have a latent desire to make them better. There may be a lack of a strategy or commitment to change in many organizations, but the underlying potential to improve is there, and that’s a very good thing if capitalized upon.

Challenging the Status Quo

Pivoting to the future state has to start with a few critical questions:

- Where does IT create value for the organization?

- Which of those capabilities are available through commercially available solutions?

- To what degree are “differentiated” capabilities or features truly creating value? Are they exceptions or the norm?

Using an example from the past, a delivery team was charged with solving a set of business problems that they routinely addressed through custom solutions, even though the same capabilities could be accomplished through integration of one or more commercially available technologies. From an internal standpoint, the team promoted the idea that they had a rapid delivery process, were highly responsive to the business needs they were meant to address, etc. The problem is that the custom approach actually cost more money to develop, maintain, and support, and was considerably more difficult to scale. Given that solutions were also continually developed with a lack of standards, the team’s ability to adopt or integrate any new technologies available on the market was non-existent. Those situations inevitably led to new custom solutions and the costs of ownership skyrocketed over time.

This situation raises the question: if it’s possible to deliver equivalent business capability without building anything “in house”, why not do just that?

In the proverbial “buy versus build” argument, these are the reasons I believe it is valid to ultimately build a solution:

- There is nothing commercially available that provides the capability at a reasonable cost

  - I’m referencing cost here, but it’s critical to understand the TCO implications of building and maintaining a solution over time. They are very often underestimated.

- There is a commercially available solution that can provide the capability, but something about privacy, IP, confidentiality, security, or compliance-related concerns makes that solution infeasible in a way that contractual terms can’t address

  - I mention contracting purposefully here, because I’ve seen viable solutions eliminated from consideration over a lack of willingness to contract effectively, and that seems suboptimal by comparison with the cost of building alternative solutions instead
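To make the TCO point a little more concrete, here is a minimal sketch of the comparison I have in mind. Every figure below is hypothetical and chosen only to show the shape of the calculation, and the function name and its parameters are my own illustration rather than any standard model. The assumption baked in is that run costs for a custom build tend to grow each year (maintenance, support, rework), while a subscription stays roughly flat.

```python
def total_cost_of_ownership(upfront: float, annual_run: float, years: int,
                            annual_growth: float = 0.0) -> float:
    """Upfront cost plus yearly run costs that grow by a fixed rate each year."""
    run_costs = sum(annual_run * (1 + annual_growth) ** year for year in range(years))
    return upfront + run_costs


# Hypothetical figures only: a custom build with growing maintenance costs
# versus a commercial solution with a flat annual subscription.
build = total_cost_of_ownership(upfront=500_000, annual_run=200_000,
                                years=5, annual_growth=0.10)
buy = total_cost_of_ownership(upfront=100_000, annual_run=250_000, years=5)

print(f"Build, 5-year TCO: {build:,.0f}")
print(f"Buy, 5-year TCO:   {buy:,.0f}")
```

With these made-up inputs the build option looks cheaper to run in year one but ends up more expensive over five years, which is the kind of underestimation I see in practice. Real inputs will obviously differ, and the answer can go either way.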

Ultimately, we create value through business capabilities enabled by technology; “who” built them doesn’t matter.

Rethinking the Model

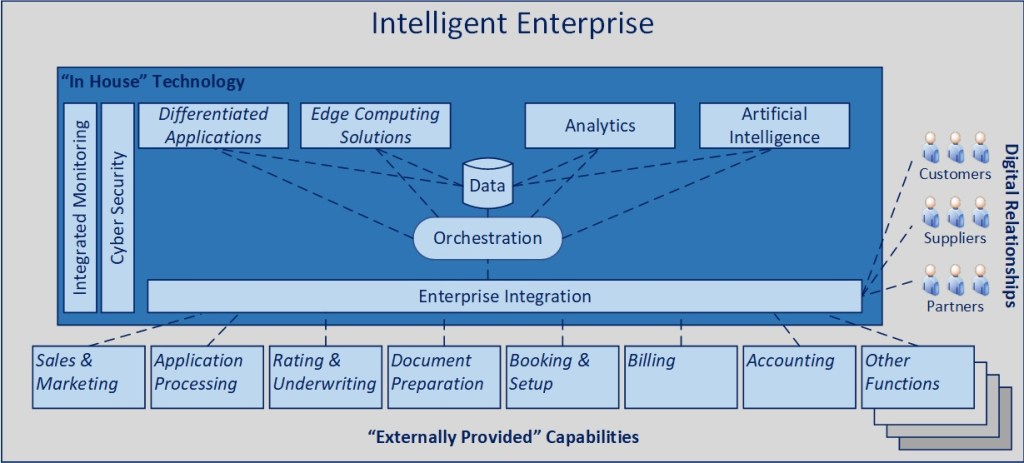

My assertion is that we will obtain the most value and acceleration of business capabilities when we shift towards a producer/consumer model in technology as a whole.

What that suggests is that “corporate IT” largely adopts the mindset of the consumer of technologies (specifically services or components) developed by producers focused purely on building configurable, leverageable components that can be integrated in compelling ways into a connected ecosystem (or enterprise) of the future.

What corporate IT “produces” should be limited to differentiated capabilities that are not commercially available, and a limited set of foundational capabilities that will be outlined below. By trying to produce less and thinking more as a consumer, this should shift the focus internally towards how technology can more effectively enable business capability and innovation and externally towards understanding, evaluating, and selecting from the best-of-breed capabilities in the market that help deliver on those business needs.

The implication, of course, for those focused on custom development would be to move towards those differentiated capabilities or entirely towards the producer side (in a product-focused environment), which honestly could be more satisfying than corporate IT can be for those with a strong development inclination.

The cumulative effect of these adjustments should lead to an influx of talent into the product community, an associated expansion of available advanced capabilities in the market, and an accelerated ability to eventually adopt and integrate those components in the corporate environment (assuming the right infrastructure is then in place), creating more business value than is currently possible where everyone tries to do too much and sub-optimizes their collective potential.

Learning from the Evolution of Infrastructure

The Infrastructure Journey

You don’t need to look very far back in time to remember when the role of a CTO was largely focused on managing data centers and infrastructure in an internally hosted environment. Along the way, third parties emerged to provide hosting services and alleviate the need to be concerned with routine maintenance, patching, and upgrades. Then converged infrastructure and the software-defined data center provided opportunities to consolidate and optimize that footprint and manage cost more effectively. With the rapid evolution of public and private cloud offerings, the arguments for managing much of your own infrastructure beyond those related specifically to compliance or legal concerns are very limited and the trajectory of edge computing environments is still evolving fairly rapidly as specialized computing resources and appliances are developed. The learning being: it’s not what you manage in house that matters, it’s the services you provide relative to security, availability, scalability, and performance.

Ok, so what happens when we apply this conceptual model to data and applications? What if we were to become a consumer of services in these domains as well? The good news is that this journey is already underway; the question is how far we should take things in the interest of optimizing the value of IT within an organization.

The Path for Data and Analytics

In the case of data, I think about this area in two primary dimensions:

- How we store, manage, and expose data

- How we apply capabilities to that data and consume it

In terms of storage, the shift from hosted data to cloud-based solutions is already underway in many organizations. The key levers continue to be ensuring data quality and governance, finding ways to minimize data movement and optimize data sharing (while facilitating near real-time analytics), and establishing means to expose data in standard ways (e.g., virtualization) that enable downstream analytic capabilities and consumption methods to scale and work consistently across an enterprise. Certainly, the cost of ingress and egress of data across environments is a key consideration, especially where SaaS/PaaS solutions are concerned. Another opportunity continues to be the money wasted on building data lakes (beyond archival and unstructured data needs) when viable platform solutions in that space are available. From my perspective, the less time and resources spent on moving and storing data to no business benefit, the more energy can be applied to exposing, analyzing, and consuming that data in ways that create actual value. Simply said, we don’t create value in how or where we store data; we create value in how we consume it.

On the consumption side, having a standards-based environment with a consistent method for exposing data and enabling integration will lend itself well to tapping into the ever-expanding range of analytical tools on the market, as well as swapping out one technology for another as those tools continue to evolve and advance in their capabilities over time. The other major pivot is to shift away from “traditional” analytical reporting and business intelligence solutions towards more dynamic data apps that leverage AI to inform meaningful end-user actions, whether that’s for internal or external users of systems. Compliance-related needs aside, at an overall level, the primary goal of analytics should be informed action, not administrivia.

The Shift in Applications

The challenge in the applications environment is arbitrating the balance between monolithic (“all in”) solutions, like ERPs, and a fully distributed component-based environment that requires potentially significant management and coordination from an IT standpoint.

Conceptually, for smaller organizations, where the core applications (like an ERP suite + CRM solution) represent the majority of the overall footprint and there aren’t a significant number of specialized applications that must interoperate with them, it likely would be appropriate and effective to standardize based on those solutions, their data model, and integration technologies.

On the other hand, the more diverse and complex the underlying footprint is for a medium- to large-size organization, the more value there is in looking at ways to decompose these relatively monolithic environments to provide interoperability across solutions, enable rapid integration of new capabilities into a best-of-breed ecosystem, and facilitate analytics that span multiple platforms in ways that would be difficult, costly, or impossible to do within any one or two given solutions. What that translates to, in my mind, is an eventual decline of the monolithic ERP-centric environment in favor of a more service-driven ecosystem where individually configured capabilities are orchestrated through data and integration standards with components provided by various producers in the market. That doesn’t necessarily align to the product strategies of individual companies trying to grow through complementary vertical or horizontal solutions, but I would argue those products should create value at an individual component level and be configurable such that swapping out one component of a larger ecosystem should still be feasible without having to abandon the other products in that application suite (that may individually be best-of-breed) as well.

Whether shifting from a highly insourced to a highly outsourced/consumption-based model for data and applications will be feasible remains to be seen, but there was certainly a time not that long ago when hosting a substantial portion of an organization’s infrastructure footprint in the public cloud was a cultural challenge. Moving up the technology stack from the infrastructure layer to data and applications seems like a logical extension of that mindset, placing emphasis on capabilities provided and value delivered versus assets created over time.

Defining Critical Capabilities

Own Only What is Essential

Making an argument to shift to a consumption-oriented mindset in technology doesn’t mean there isn’t value in “owning” anything; rather, it’s meant to be a call to evaluate and challenge assumptions related to where IT creates differentiated value and to apply our energies towards those things. What can be leveraged, configured, and orchestrated, I would buy and use. What should be built? Capabilities that are truly unique, create competitive advantage, can’t be sourced in the market overall, and that create a unified experience for end users. On the final point, I believe that shifting to a disaggregated applications environment could create complexity for end users in navigating end-to-end processes in intuitive ways, especially to the degree that data apps and integrated intelligence become a common way of working. To that end, building end user experiences that can leverage underlying capabilities provided by third parties feels like a thoughtful balance between a largely outsourced application environment and a highly effective and productive individual consumer of technology.

Recognize Orchestration is King

Workflow and business process management is not a new concept in the integration space, but it’s been elusive (in my experience) for many years for a number of reasons. What is clear at this point is that, with the rapid expansion in technology capabilities continuing to hit the market, our ability to synthesize a connected ecosystem that blends these unique technologies with existing core systems is critical. The more we can do this in consistent ways, the more we shift towards a configurable and dynamic environment that is framework-driven, the more business flexibility and agility we will provide… and that translates to innovation and competitive advantage over time. Orchestration is a critical piece of deciding which processes are critical enough that they shouldn’t be relegated to the internal workings of a platform solution or ERP, but taken in-house, mapped out, and coordinated with the intention of creating differentiated value that can be measured, evaluated, and optimized over time. Clearly the scalability and performance of this component is critical, especially to the degree there is a significant amount of activity being managed through this infrastructure, but I believe the transparency, agility, and control afforded in this kind of environment would greatly outweigh the complexity involved in its implementation.

Put Integration in the Center

In a service-driven environment, clearly the infrastructure for integration, streaming in particular, along with enabling a publish and subscribe model for event-driven processing, will be critical for high-priority enterprise transactions. The challenge in integration conversations in my experience tends to be defining the transactions that “matter”, in terms of facilitating interoperability and reuse, and those that are suitable for point-to-point, one-off connections. There is ultimately a cost for reuse when you try to scale, and there is discipline needed to arbitrate those decisions to ensure they are appropriate to business needs.
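For readers less familiar with the publish and subscribe idea, the following is a toy, in-memory sketch of the pattern. It is only an illustration: the `EventBus` class, the `order.created` topic, and the two subscribers are all hypothetical names I made up, and a real enterprise would rely on a streaming or messaging platform rather than anything like this. The point it shows is that the publisher of an event doesn’t need to know who consumes it, which is what makes adding a new consumer cheap.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-memory publish/subscribe hub (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to be called for every event on a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver an event to all current subscribers; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
billing_log: List[str] = []
fulfillment_log: List[str] = []

# Two independent consumers subscribe to the same business event.
bus.subscribe("order.created", lambda e: billing_log.append(e["order_id"]))
bus.subscribe("order.created", lambda e: fulfillment_log.append(e["order_id"]))

# The producer publishes once and has no knowledge of the consumers.
delivered = bus.publish("order.created", {"order_id": "A-100"})
print(delivered, billing_log, fulfillment_log)
```

A point-to-point connection, by contrast, would have the order system call billing and fulfillment directly, which is simpler for one or two consumers and is often the right call for transactions that don’t “matter” at an enterprise level.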

Reassess Your Applications/Services

With any medium to large organization, there is likely technology sprawl to be addressed, particularly if there is a material level of custom development (because component boundaries likely won’t be well architected) and acquired technology (because of the duplication it can cause in solutions and instances of solutions) in the landscape. Another complicating factor could be the diversity of technologies and architectures in place, depending on whether or not a disciplined modernization effort exists, the level of architecture governance in place, and the rate and means by which new technologies are introduced into the environment. All of these factors call for a thoughtful portfolio strategy, to identify critical business capabilities and ensure the technology solutions meant to enable them are modern, configurable, rationalized, and integrated effectively from an enterprise perspective.

Leverage Data and Insights, Then Optimize

With analytics and insights being critical to differentiated business performance, three things become critical components of any future IT infrastructure: an effective data governance program with business stewardship, the right core, standard data sets to enable purposeful, actionable analytics, and process performance data associated with orchestrated workflows. This is not all data; it’s the subset that creates enough business value to justify the investment in making it actionable. As process performance data is gathered through the orchestration approach, analytics can be performed to look for opportunities to evolve processes, configurations, rules, and other characteristics of the environment based on key business metrics to improve performance over time.

Monitor and Manage

With the expansion of technologies and components, internal and external to the enterprise environment, having the ability to monitor and detect issues, proactively take action, and mitigate performance, security, or availability issues will become increasingly important. Today’s tools are too fragmented and siloed to achieve the level of holistic understanding that is needed between hosted and cloud-based environments, including internal and external security threats in the process.

Secure “Everything”

While the risk that zero trust and vulnerability management are meant to address is expanding at a rate that exceeds an organization’s ability to mitigate it, treating security as a fundamental requirement of current and future IT environments is a given. The development of a purposeful cyber strategy, prioritizing areas for tooling and governance effectively, and continuing to evolve and adapt that infrastructure will be core to the DNA of operating successfully in any organization. Security is not a nice-to-have; it’s a requirement.

The Role of Standards and Governance

What makes the framework-driven environment of the future work is ultimately having meaningful standards and governance, particularly for data and integration, but extending into application and data architecture, along with how those environments are constructed and layered to facilitate evolution and change over time. Excellence takes discipline and, while that may require some additional investment in cost and time during the initial and ongoing stages of delivery, it will easily pay for itself in business agility, operating cost/cost of ownership, and risk/exposure to cyber incidents over time.

The Lending Example

Having spent time a number of years ago understanding and developing strategy in the consumer lending domain, I find the similarities in process between direct and indirect lending, prime and specialty/sub-prime, and from simple products like credit cards to more complex ones like mortgages difficult to ignore. That being said, it isn’t unusual for systems to exist in a fairly siloed manner, from application to booking, from document preparation into the servicing process itself.

What’s interesting, from my perspective, is where the differentiation actually exists across these product sets: in the rules and workflow being applied across them, while the underlying functions themselves are relatively the same. As an example, one thing that differentiates a lender is their risk management policy, not necessarily the tool they use to implement their underwriting rules or scoring models per se. Similarly, whether pulling a credit score is part of the front end of the process in something like credit cards or an intermediate step in education lending, having a configurable workflow engine could enable origination across a diverse product set with essentially the same back-end capabilities and likely at a lower operating cost.
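As a rough illustration of what I mean by a configurable workflow engine, here is a small sketch in which two lending products share the same back-end steps and differ only in configuration: the order of the steps and the risk threshold. Everything in it is hypothetical, including the step names, the `bureau_score` field, the `min_score` values, and the product definitions. It is meant to show the shape of the idea, not how any real origination platform works.

```python
from typing import Callable, Dict, List

Application = Dict[str, object]
Step = Callable[[Application, Dict[str, object]], Application]


def capture_application(app: Application, cfg: Dict[str, object]) -> Application:
    return {**app, "captured": True}


def verify_enrollment(app: Application, cfg: Dict[str, object]) -> Application:
    return {**app, "enrollment_verified": True}


def pull_credit_score(app: Application, cfg: Dict[str, object]) -> Application:
    # Stub: a real step would call a credit bureau; here the score is supplied up front.
    return {**app, "credit_score": app["bureau_score"]}


def underwrite(app: Application, cfg: Dict[str, object]) -> Application:
    # The lender's risk policy lives in configuration, not in the step itself.
    return {**app, "approved": app["credit_score"] >= cfg["min_score"]}


# Shared, relatively commoditized capabilities.
STEPS: Dict[str, Step] = {
    "capture_application": capture_application,
    "verify_enrollment": verify_enrollment,
    "pull_credit_score": pull_credit_score,
    "underwrite": underwrite,
}

# Product differentiation expressed purely as workflow order and rules (hypothetical values).
PRODUCTS: Dict[str, Dict[str, object]] = {
    "credit_card": {
        "steps": ["pull_credit_score", "capture_application", "underwrite"],
        "min_score": 680,
    },
    "education_loan": {
        "steps": ["capture_application", "verify_enrollment",
                  "pull_credit_score", "underwrite"],
        "min_score": 640,
    },
}


def originate(product: str, app: Application) -> Application:
    """Run an application through the steps configured for the given product."""
    cfg = PRODUCTS[product]
    history: List[str] = []
    for name in cfg["steps"]:
        app = STEPS[name](app, cfg)
        history.append(name)
    return {**app, "history": history}


card = originate("credit_card", {"bureau_score": 660})
loan = originate("education_loan", {"bureau_score": 660})
print(card["approved"], card["history"])
print(loan["approved"], loan["history"])
```

In this sketch, launching a new product or changing a risk policy means editing the `PRODUCTS` configuration rather than building another siloed system.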

So why does it matter? Well, to the degree that the focus shifts from developing core components that implement relatively commoditized capability to the rules and processes that enable various products to be delivered to end consumers, the speed with which products can be developed, enhanced, modified, and deployed should be significantly improved.

Ok, Sounds Great, But Now What?

It Starts with Culture

At the end of the day, even the best designed solutions come down to culture. As I mentioned above, excellence takes discipline and, at times, patience and thoughtfulness that seem to contradict the speed with which we want to operate from a technology (and business) standpoint. That being said, given the challenges that ultimately arise when you operate without the right standards, discipline, and governance, the outcome is well worth the associated investments. This is why I placed courageous leadership as the first pillar in the five dimensions outlined in my article on Excellence by Design. Leadership is critical and, without it, everything else becomes much more difficult to accomplish.

Exploring the Right Operating Model

Once a strategy is established to define the desired future state and a culture to promote change and evolution is in place, looking at how to organize around managing that change is worth consideration. I don’t necessarily believe in “all in” operating approaches, whether it is a plan/build/run, product-based orientation, or some other relatively established model. I do believe that, given leadership and adaptability are critically needed for transformational change, looking at how the organization is aligned to maintaining and operating the legacy environment versus enabling establishment and transition to the future environment is something to explore. As an example, rather than assuming a pure product-based orientation, which could mushroom into a bloated organization design where not all leaders are well suited to manage change effectively, I’d consider organizing around a defined set of “transformation teams” that operate in a product-oriented/iterative model, but basically take on the scope of pieces of the technology environment, re-orient, optimize, modernize, and align them to the future operating model, then transition those working assets to different leaders that maintain or manage those solutions in the interest of moving to the next set of transformation targets. This should be done in concert with looking for ways to establish “common components” teams (where infrastructure like cloud platform enablement can be a component as well) that are driven to produce core, reusable services or assets that can be consumed in the interest of ultimately accelerating delivery and enabling wider adoption of the future operating model for IT.

Managing Transition

One of the consistent challenges with any kind of transformative change is moving from what is likely a very diverse, heterogeneous environment to one that is standards-based, governed, and relatively optimized. While it’s tempting to take on too much scope and ultimately undermine the aspirations of change, I believe there is a balance to be struck in defining and establishing some core delivery capabilities that are part of the future infrastructure, while incrementally migrating individual capabilities into that future environment over time. This is another case where disciplined operations and disciplined delivery come into play so that changes are delivered consistently but also in a way that is sustainable and consistent with the desired future state.

Wrapping Up

While a certain level of evolution is guaranteed as part of working in technology, the primary question is whether we will define and shape that future or be continually reacting and responding to it. My belief is that we can, through a level of thoughtful planning and strategy, influence and shape the future environment to be one that enables rapid evolution as well as accelerated integration of best-of-breed capabilities at a pace and scale that is difficult to deliver today. It is unlikely that we’ll truly move to a full producer/consumer type environment that is service based, standardized, governed, orchestrated, fully secured, and optimized, but falling short of excellence as an aspiration would still leave us in a considerably better place than where we are today… and it’s a journey worth making in my opinion.

I hope the ideas were worth considering. Thanks for spending the time to read them. Feedback is welcome as always.

-CJG 03/08/2024