A compelling vision will stand the test of time, but it will also evolve to meet the needs of the day.

Developing strategy is inherently challenging for several reasons:

- It is difficult to balance the long- and short-term goals of an organization

- It generally takes significant investments to accomplish material outcomes

- The larger the change, the more difficult it can be to align people to the goals

- The time it takes to mobilize and execute can undermine the effort

- The discipline needed to execute at scale requires a level of experience not always available

- Seeking “perfection” can be counterproductive compared with aiming for “good enough”

The factors above, and others too numerous to list, should serve as reminders that excellence, speed, and agility require planning and discipline; they don’t happen by accident. The focus of this article will be to break down a few aspects of transitioning to an integrated future state, with the aim of increasing the probability of achieving predictable outcomes while also getting there quickly, cost-effectively, and with quality. That’s a fairly tall order, but if we don’t shoot for excellence, we are ultimately choosing obsolescence.

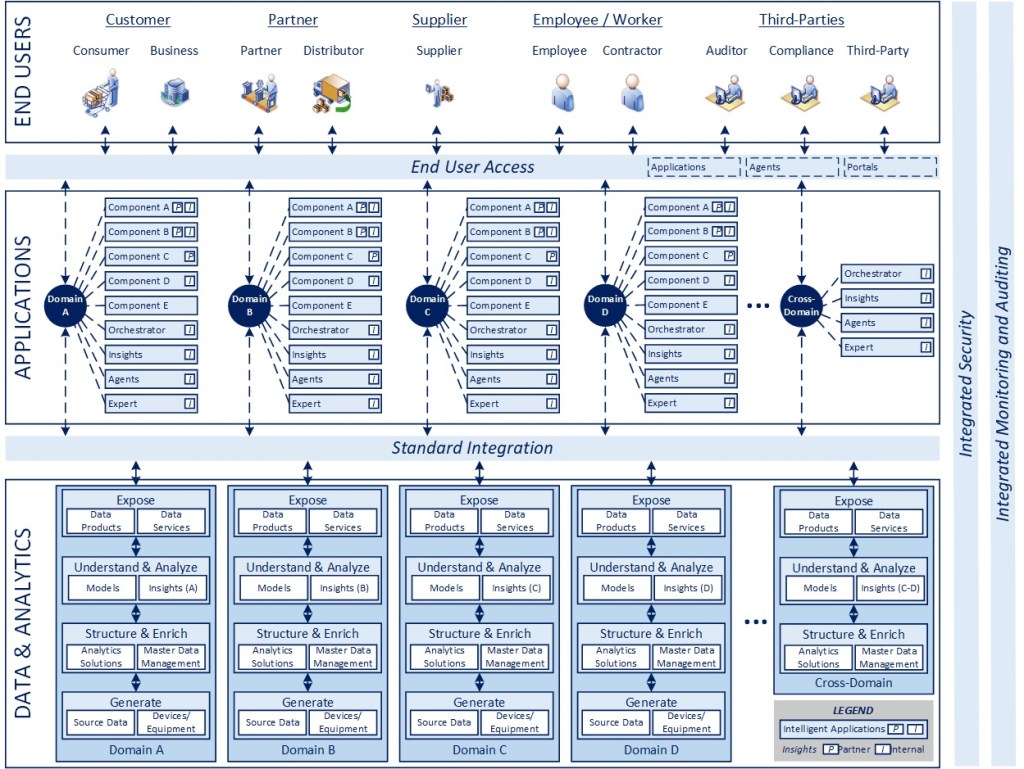

This is the sixth post in a series focused on where I believe technology is heading, with an eye towards a more harmonious integration and synthesis of applications, AI, and data… what I previously referred to in March of 2022 as “The Intelligent Enterprise”. The sooner we begin to operate from a unified view of how to align, integrate, and leverage these capabilities, which are often disjointed today, the faster an organization will leapfrog others in its ability to drive sustainable productivity, profitability, and competitive advantage.

Key Considerations

Create the Template for Delivery

My assumption is that we care about three things in transitioning to a future, integrated environment:

- Rapid, predictable delivery of capabilities over time (speed-to-market, competitive advantage)

- Optimized costs (minimal waste, value disproportionate to expense)

- Seamless integration of new capabilities as they emerge (ease of use and optimized value)

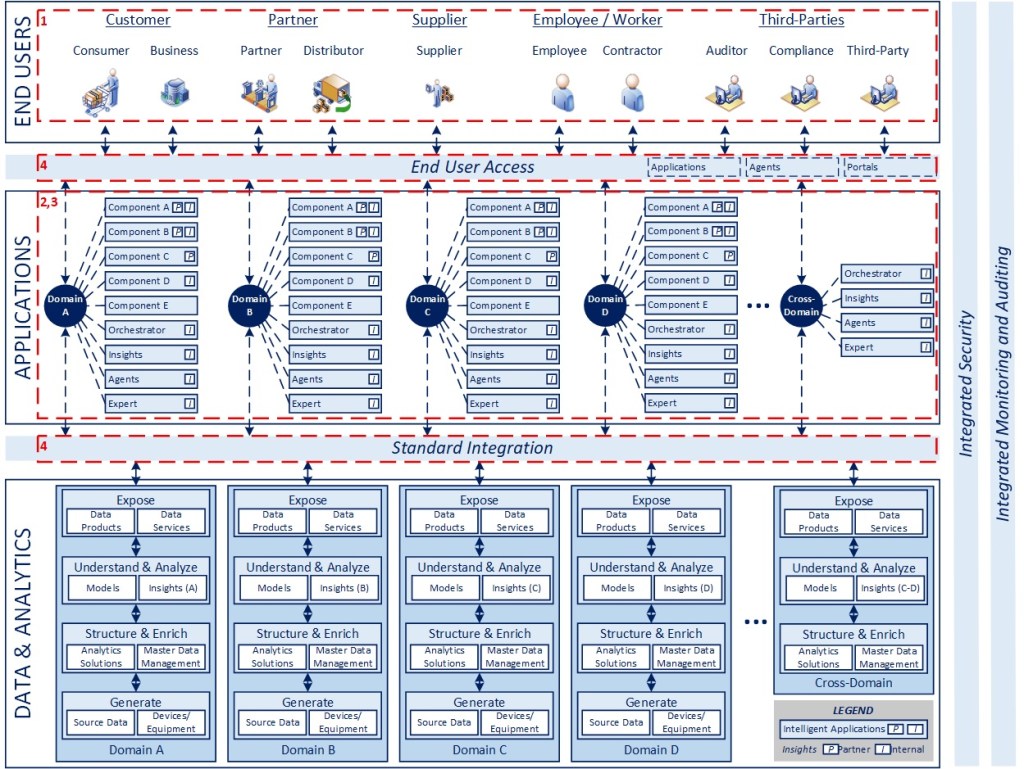

What these three things imply is the need for a consistent, repeatable approach and a standard architecture towards which we migrate capabilities over time. Articles 2-5 of this series explore various dimensions of that conceptual architecture from an enterprise standpoint; the purpose here is to focus on approach.

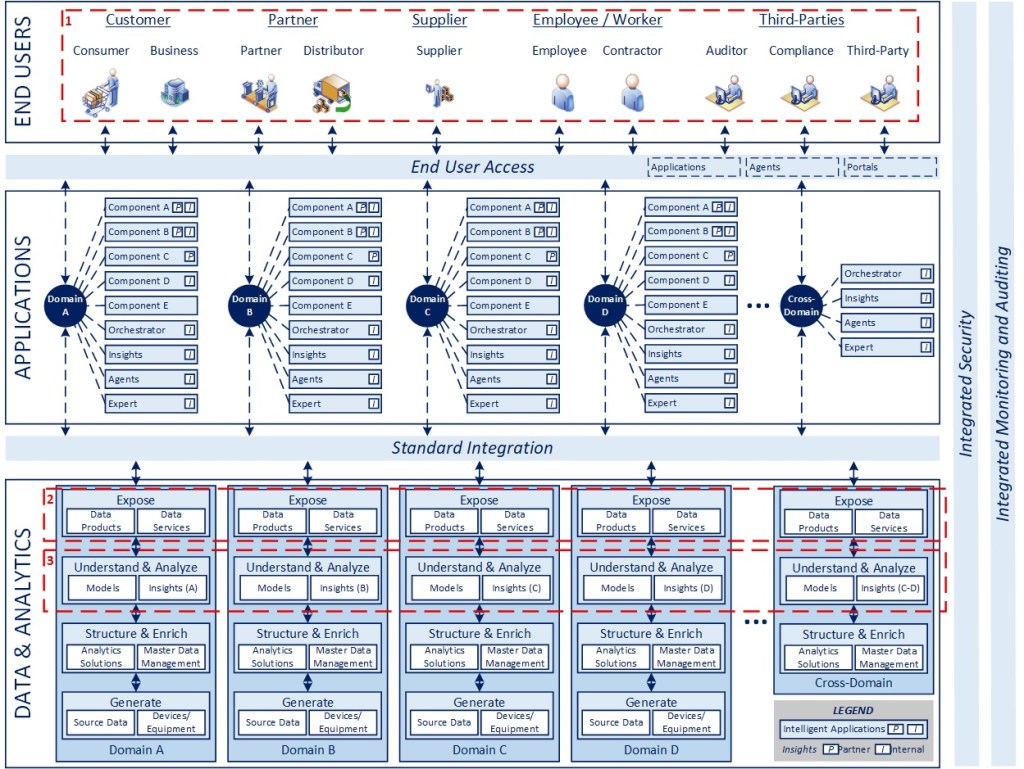

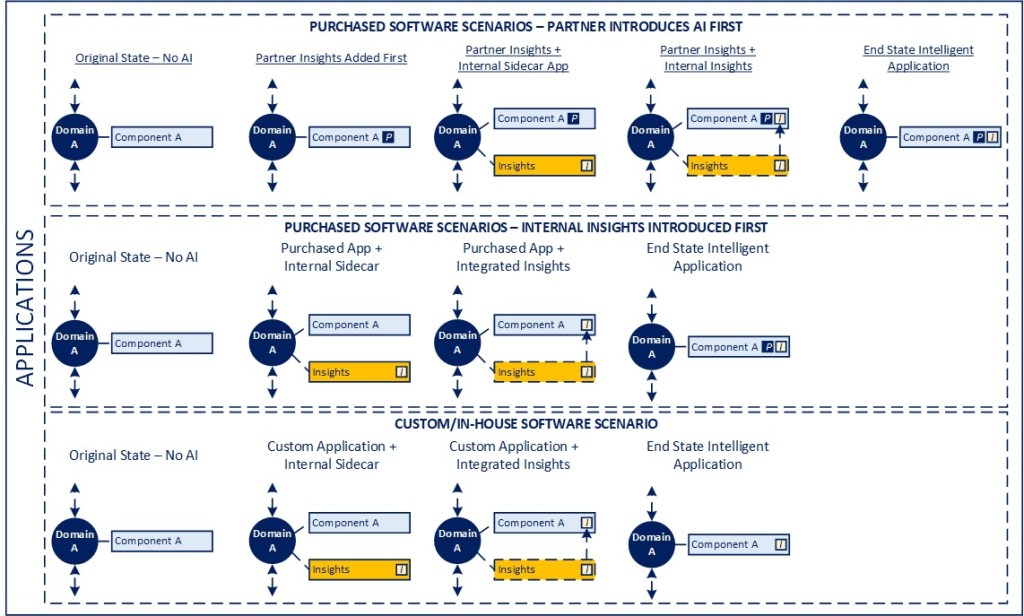

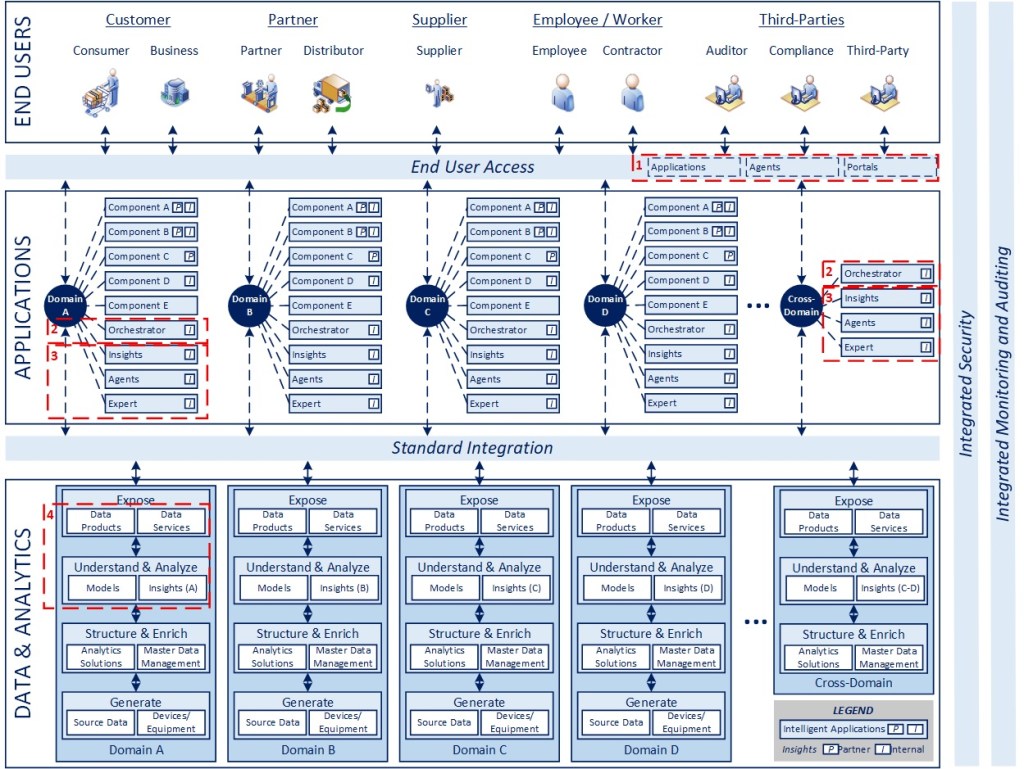

As was discussed in the article on the overall framework, the key is to start the design of the future environment around the various consumers of technology and the capabilities being provided to them, now and in the future, and then to prioritize the relative value of those capabilities over time. That should be done at both an internal and an external level, so that individual roadmaps can be developed by domain to eventually surface and provide those capabilities as part of a portfolio management process. In the course of identifying those capabilities, some key questions will be whether they are available today, whether they involve some level of AI services, whether they can or should be provided by a third party or internally, and whether the data ultimately exists to enable them. This is where a significant inflection point should occur: the delivery of those capabilities should follow a consistent approach and pattern, so that it can be repeated, leveraged, and made more efficient over time.

For instance, if an internally developed, AI-enabled capability is needed, the way the data is exposed and processed, the way the AI service (or data product) is exposed, and the way it is integrated into and consumed by a packaged or custom application should be exactly the same from a design standpoint, regardless of what the specific capability is. That isn’t to say that the work needs to be done by only one team, as will be explored in the final article on IT organizational implications, but rather that we ideally want to determine the best “known path” to delivery, execute it repeatedly, and evolve it as required over time.

Taking this approach should provide a consistent end-user experience as services are brought online, a relatively streamlined delivery process because you are essentially mass-producing capabilities, and a relatively cost-optimized environment because you are eliminating the bloat and waste of operating multiple delivery silos, which would eventually impede speed-to-market at scale and lead to technical debt.

From an architecture standpoint, without going too deep into the mechanics here, to the extent that the current-state and future-state enterprise architecture models differ, it would be worth evaluating approaches like data virtualization and adapters/facades in the integration layer as ways to translate between the current and future models. That preserves logical consistency in the solution architecture even where the underlying physical implementations vary. Our goal in enterprise architecture should always be to facilitate rapid execution while promoting standards and simplification and reducing complexity and technical debt wherever possible over time.
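To make the adapter/facade idea a bit more concrete, here is a minimal Python sketch under illustrative assumptions: every class, field, and function name (`Customer`, `CustomerSource`, `LegacyCrmAdapter`, `ModernCustomerService`, `greeting`, and the legacy column names) is hypothetical. It shows consuming code that depends only on a logical contract, while an adapter translates a current-state record shape into the future-state model behind that contract.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Customer:
    """Hypothetical future-state (canonical) model that consumers code against."""
    customer_id: str
    full_name: str
    email: str


class CustomerSource(Protocol):
    """Logical contract in the integration layer; physical sources vary behind it."""
    def get_customer(self, customer_id: str) -> Customer: ...


class LegacyCrmAdapter:
    """Adapter translating a hypothetical current-state record into the canonical model."""

    def __init__(self, legacy_rows: dict[str, dict[str, str]]) -> None:
        # Stand-in for a legacy system, keyed by its own customer number.
        self._rows = legacy_rows

    def get_customer(self, customer_id: str) -> Customer:
        row = self._rows[customer_id]
        return Customer(
            customer_id=row["CUST_NO"],
            full_name=f'{row["FIRST_NM"]} {row["LAST_NM"]}'.strip(),
            email=row["EMAIL_ADDR"].lower(),
        )


class ModernCustomerService:
    """Hypothetical future-state source that already speaks the canonical model."""

    def __init__(self, customers: dict[str, Customer]) -> None:
        self._customers = customers

    def get_customer(self, customer_id: str) -> Customer:
        return self._customers[customer_id]


def greeting(source: CustomerSource, customer_id: str) -> str:
    # Consumers depend only on the contract, not on the physical implementation.
    return f"Hello, {source.get_customer(customer_id).full_name}"


legacy = LegacyCrmAdapter(
    {"42": {"CUST_NO": "42", "FIRST_NM": "Ada", "LAST_NM": "Lovelace",
            "EMAIL_ADDR": "ADA@EXAMPLE.COM"}}
)
modern = ModernCustomerService({"7": Customer("7", "Grace Hopper", "grace@example.com")})
print(greeting(legacy, "42"))  # Hello, Ada Lovelace
print(greeting(modern, "7"))   # Hello, Grace Hopper
```

The design choice worth noting is that the translation logic lives in one place: when the legacy source is eventually retired, the adapter goes away and the consuming code is untouched.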

Govern Standards and Quality

With a templatized delivery model and target architecture in place, the next key aspect of the transition is governance: reviewing the delivery of new capabilities to identify opportunities to develop and evolve standards, and evolving the “template” itself, whether that involves adding automation to delivery, building reusable frameworks or components, or creating other assets that reduce friction and ease future efforts.

Once new capabilities are coming online, the other key aspect is to review them for quality and performance, looking for ways to evolve the approach, adjust the architecture, and continue refining our understanding of how these integrated capabilities can best be delivered and leveraged on an end-to-end basis.

Again, the overall premise of this entire series of articles is to chart a path towards an integrated enterprise environment for applications, data, and AI in the future. To be repeatable, we need to be consistent in how we plan, execute, govern, and evolve in the delivery of capabilities over time.

Certainly, there will be learnings that come from delivery, especially early in the adoption and integration of these capabilities. How we establish and enable governance, and evolve based on what we learn, is critical to making delivery more predictable and to producing more useful capabilities and insights over time.

Evolve in a Thoughtful Manner

With our learnings firmly in hand, the mental model I believe would be worth considering is more of a scaled Agile-type approach, with “increment-level”/monthly scheduled retrospectives that review learnings across multiple sprints/iterations (ideally across multiple products/domains) and identify opportunities to adjust estimation metrics, refine design patterns, develop core/reusable services, and make any other changes that would improve efficiency, cost, quality, or predictability.

Whether these occur monthly at the outset and eventually move to a quarterly cadence as the environment and standards become better defined will depend on a number of factors. The point is to avoid overreacting to any individual implementation and instead to look across a set of deliveries for consistent issues, patterns, and opportunities that will have a disproportionate impact on the environment as a whole.

The other key aspect to remember in relation to evolution is that the capabilities of AI in particular are evolving very rapidly at this point, which is another reason to think about the overall architecture, separation of concerns, and integration standards in a very deliberate way. A new version of a tool or technology shouldn’t require us to rewrite a significant portion of our footprint. Ideally, it should be a matter of either upgrading the previous version and having the new capabilities available everywhere that service is consumed, or swapping out one technology for another while everything on the other side of an API remains as unchanged as possible. This is admittedly a fairly “perfect world” description, and what happens in practice will depend on the nature of the underlying change. The point is that, without allowing for these changes up front in the design, the disruption they cause will likely be amplified and slow overall progress.
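As an illustration of that separation of concerns, here is a minimal Python sketch, assuming a hypothetical `Summarizer` contract and two toy implementations that stand in for successive versions of an AI tool (no real AI service or library API is implied; `PROVIDERS`, `build_summarizer`, and `ticket_preview` are also illustrative names). Consuming code depends only on the contract, and a configuration key selects which implementation sits behind it.

```python
from typing import Callable, Protocol


class Summarizer(Protocol):
    """Hypothetical stable contract that consuming applications depend on."""
    def summarize(self, text: str, max_words: int) -> str: ...


class TruncatingSummarizerV1:
    """Toy stand-in for an older tool version: returns the first max_words words."""
    def summarize(self, text: str, max_words: int) -> str:
        return " ".join(text.split()[:max_words])


class FirstSentenceSummarizerV2:
    """Toy stand-in for a newer tool: first sentence, capped at max_words words."""
    def summarize(self, text: str, max_words: int) -> str:
        first_sentence = text.split(".")[0].strip()
        return " ".join(first_sentence.split()[:max_words])


# Hypothetical registry mapping a configuration value to an implementation.
# Swapping technologies means changing the configured key, not the consumers.
PROVIDERS: dict[str, Callable[[], Summarizer]] = {
    "v1": TruncatingSummarizerV1,
    "v2": FirstSentenceSummarizerV2,
}


def build_summarizer(provider_key: str) -> Summarizer:
    return PROVIDERS[provider_key]()


def ticket_preview(summarizer: Summarizer, ticket_body: str) -> str:
    # Consumer code: identical regardless of which provider sits behind the contract.
    return summarizer.summarize(ticket_body, max_words=5)


body = "Order 1182 arrived damaged. Customer requests a replacement by Friday."
print(ticket_preview(build_summarizer("v1"), body))  # Order 1182 arrived damaged. Customer
print(ticket_preview(build_summarizer("v2"), body))  # Order 1182 arrived damaged
```

In a real environment the contract would more likely be a service API than an in-process interface, and a swap may still require testing and some adjustment, but the principle of keeping consumers on the stable side of the boundary is the same.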

Summing Up

Change is a constant in technology. It’s a part of life given that things continue to evolve and improve, and that’s a good thing to the extent that it gives us the ability to solve more complex problems, create more value, and respond more effectively to business needs as they evolve. The challenge we face is being disciplined enough to think through the approach so that we can become effective, fast, and repeatable. Delivering individual projects isn’t the goal; it’s delivering capabilities rapidly and repeatably, at scale, with quality. That’s an exercise in disciplined delivery.

Having now covered the technology and delivery-oriented aspects of the future-state concept, I will focus the remaining article on how to think about the organizational implications for IT.

Up Next: IT Organizational Implications

I hope the ideas were worth considering. Thanks for spending the time to read them. Feedback is welcome as always.

-CJG 08/15/2025