No one builds applications or uses new technology with the intention of making things worse… and yet we have and still do at times.

Why does this occur, time and again, with technology? The latest thing was supposed to “disrupt” or “transform” everything. I read something that suggested this was it: the thing we needed to do, that a large percentage of companies were planning, and that was going to represent $Y billions of spending two years from now, generating disproportionate efficiency, profitability, and so on. Two years later (if that), something else was being discussed. There were a considerable number of “learnings” from the previous exercise, but the focus was no longer the same… whether that was Windows applications and client/server computing, the internet, enterprise middleware, CRM, Big Data, data lakes, SaaS, PaaS, microservices, mobile applications, converged infrastructure, public cloud… The list is quite long, and I’m not sure that productivity and the value/cost equation for technology investments are any better in many cases.

The belief that technology can have such a major impact and the degree of continual change involved have always made the work challenging, inspiring, and fun. That being said, the tendency to rush into the next advance without forming a thoughtful strategy or being deliberate about execution can be perilous in what it often leaves behind, which is generally frustration for the end users/consumers of those solutions and more unrealized benefits and technical debt for an organization. We have to do better with AI, bringing intelligence into the way we work, not treating it as something separate entirely. That’s when we will realize the full potential of the capabilities these technologies provide. In the case of an application portfolio, this is about our evolution to a suite of intelligent applications that fit into the connected ecosystem framework I described earlier in the series.

This is the fourth post in a series focused on where I believe technology is heading, with an eye towards a more harmonious integration and synthesis of applications, AI, and data… what I previously referred to in March of 2022 as “The Intelligent Enterprise”. The sooner we begin to operate off a unified view of how to align, integrate, and leverage these capabilities, which are oftentimes disjointed today, the faster an organization will leapfrog others in its ability to drive sustainable productivity, profitability, and competitive advantage.

Design Dimensions

In line with the blueprint above, articles 2-5 highlight key dimensions of the model in the interest of clarifying various aspects of the conceptual design. I am not planning to delve into specific packages or technologies that can be used to implement these concepts as the best way to do something always evolves in technology, while design patterns tend to last. The highlighted areas and associated numbers on the diagram correspond to the dimensions described below.

Before exploring various scenarios for how we will evolve the application landscape, it’s important to note a few overall assumptions:

- End user needs and understanding should come first, then the capabilities

- Not every application needs to evolve. There should be a benefit to doing so

- I believe the vast majority of product/platform providers will eventually provide AI capabilities

- Providing application services doesn’t mean I have to have a “front-end” in the future

- Governance is critical, especially to the extent that citizen development is encouraged

- If we’re not mindful of how many AI apps we deploy, we will cause confusion and productivity loss because of the fragmented experience

Purchased Software (1)

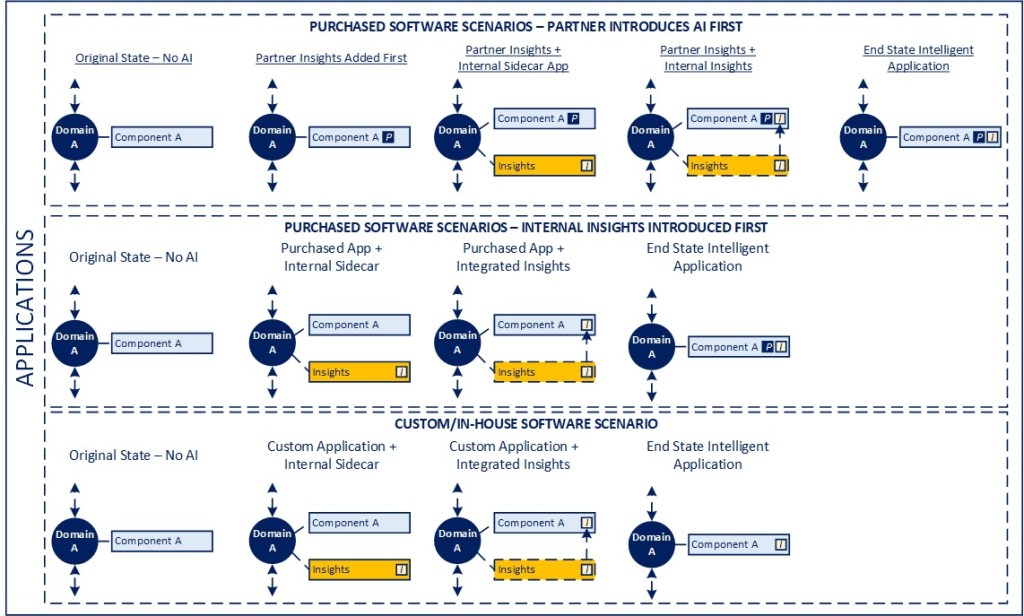

The diagram below highlights a few different scenarios for how I believe intelligence will find its way into applications.

In the case of purchased applications, between the market buzz and continuing desire for differentiation, it is extremely likely that a large share of purchased software products and platforms will have some level of “AI” included in the future, whether that is an AI/ML capability leveraging OLTP data that lives within its ecosystem, or something more causal and advanced in nature.

I believe it is important to delineate between internally generated insights and ones coming as part of a package for several reasons. First, we may not always want to include proprietary data in purchased solutions, especially to the degree they are hosted in the public cloud and we don’t want to expose our internal data to that environment from a security, privacy, or compliance standpoint. Second, we may not want to expose the rules and IP associated with our decisioning and specific business processes to the solution provider. Third, to the degree we maintain these as separate things, we create flexibility to potentially migrate to a different platform more easily than if we are tightly woven into a specific package. And, finally, the data ingress required to commingle a larger data set, in order to expand what a package could provide “out of the box”, may inflate operating costs of the platforms unnecessarily (this can definitely be the case with ERP platforms).

The overall assumption is that, rather than require custom enhancements of a base product, the goal from an architecture standpoint would be for the application to be able to consume and display information from an external AI service that is provided from your organization. This is available today within multiple ERP platforms, as an example.
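As a rough illustration of that separation, the sketch below shows an application panel that only consumes and displays insights through a shared contract, so package-provided and internally provided intelligence remain interchangeable. Everything in it is an assumption made for illustration: the `InsightProvider` contract, the two stand-in providers, the invoice fields, and the rules themselves are hypothetical and do not represent any particular ERP platform or product API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Insight:
    """A single recommendation returned by an insight provider."""
    source: str        # which provider produced it (hypothetical labels)
    message: str
    confidence: float  # 0.0 to 1.0


class InsightProvider(Protocol):
    """The contract the application depends on, not a concrete AI product."""

    def insights_for(self, record: dict) -> list[Insight]: ...


class PackageInsights:
    """Stand-in for AI that ships with the purchased package (hypothetical rule)."""

    def insights_for(self, record: dict) -> list[Insight]:
        if record.get("days_overdue", 0) > 30:
            return [Insight("package", "Invoice is more than 30 days overdue", 0.9)]
        return []


class InternalInsights:
    """Stand-in for an internally hosted service that keeps proprietary rules in-house."""

    def insights_for(self, record: dict) -> list[Insight]:
        if record.get("customer_tier") == "strategic":
            return [Insight("internal", "Strategic account: route to account manager", 0.8)]
        return []


def render_panel(record: dict, providers: list[InsightProvider]) -> list[str]:
    """What the application displays: it consumes and formats insights, nothing more."""
    lines = []
    for provider in providers:
        for insight in provider.insights_for(record):
            lines.append(f"[{insight.source}] {insight.message}")
    return lines


invoice = {"id": "INV-1", "days_overdue": 45, "customer_tier": "strategic"}
panel = render_panel(invoice, [PackageInsights(), InternalInsights()])
for line in panel:
    print(line)
```

Because the panel depends only on the contract, either provider can be added, removed, or replaced without a custom enhancement to the base product, which is the flexibility argued for above.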

The graphic below shows two different migration paths towards a future state where applications have both package-provided and internally provided AI capabilities. In the first, the package provider moves first, internal capabilities are developed in parallel as a sidecar application, and they are eventually integrated into the platform as a service. In the second, the order is reversed: the internal capability is developed first, run in parallel, and then folded into the platform solution.

Custom-Developed Software (2)

In terms of custom software, the challenge is, first, evaluating whether there is value in introducing additional capabilities for the end user and, second, understanding the implications for trying to integrate the capabilities into the application itself versus leaving them separate.

In the event that there is uncertainty about the end user value of having the capability, the best approach may be to implement the insights as a sidecar / standalone application first, then look to integrate them within the application as a second step.

If a significant amount of redesign or modernization is required to directly integrate the capabilities, it may make sense to either evaluate market alternatives as a replacement to the internal application or to leave the insights separate entirely. Similar to purchased products, the insights should be delivered as a service and integrated into the application rather than built as an enhancement. Doing so provides greater flexibility in how they are leveraged and simplifies migrations to a different solution in the future.

The third scenario in the diagram above is meant to reflect a separate insights application that is then folded into the custom application as a service over time, so that it becomes a more seamless experience for the end user.

Either way, whether it be a purchased or custom-built solution, there are two important points. The first is to decouple the insights from the applications to provide flexibility. The second is to think about providing both a front-end for users to interact with the applications and a service-based approach, so that an agent acting on behalf of the user, or the system itself, could orchestrate various capabilities exposed from that application without the need for user intervention.
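To make the dual front-end and service idea a little more concrete, here is a minimal sketch in which the same application capabilities are reached both by a screen a person reads and by an agent that chains them together. The capability names, the in-memory registry, the stock figures, and the reorder rule are all hypothetical, chosen only to show the shape of the pattern rather than a specific implementation.

```python
from __future__ import annotations

from typing import Callable

# Registry of capabilities the application exposes as services (hypothetical names).
CAPABILITIES: dict[str, Callable[..., dict]] = {}


def capability(name: str):
    """Decorator that publishes a function as a named application service."""
    def register(fn):
        CAPABILITIES[name] = fn
        return fn
    return register


@capability("check_stock")
def check_stock(sku: str) -> dict:
    stock = {"A-100": 3, "B-200": 0}  # illustrative data only
    return {"sku": sku, "on_hand": stock.get(sku, 0)}


@capability("create_reorder")
def create_reorder(sku: str, quantity: int) -> dict:
    return {"sku": sku, "quantity": quantity, "status": "submitted"}


def stock_screen(sku: str) -> str:
    """Front-end path: a person reads a screen rendered from the service."""
    result = CAPABILITIES["check_stock"](sku)
    return f"{result['sku']}: {result['on_hand']} on hand"


def reorder_agent(skus: list[str], minimum: int, reorder_quantity: int) -> list[dict]:
    """Agent path: the same services, orchestrated without user intervention."""
    orders = []
    for sku in skus:
        if CAPABILITIES["check_stock"](sku)["on_hand"] < minimum:
            orders.append(CAPABILITIES["create_reorder"](sku, reorder_quantity))
    return orders


screen = stock_screen("A-100")
orders = reorder_agent(["A-100", "B-200"], minimum=1, reorder_quantity=10)
print(screen)
print(orders)
```

The design choice worth noting is that the front-end holds no logic of its own. Both paths go through the registry, so retiring the screen later would not remove the capability.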

From Disconnected to Integrated Insights (3)

One of the reasons for separating out these various migration scenarios is to highlight the risk that introducing too many sidecar or special/single purpose applications could cause significant complexity if not managed and governed carefully. Insights should serve a process or need, and if the goal is to make a user more productive, effective, or safer, those capabilities should ultimately be used to create more intelligent applications that are easier to use. To that end, there likely would be value in working through a full product lifecycle when introducing new capabilities, to determine whether each one is meant to be preserved, integrated with a core application (as a service), or tested and possibly decommissioned once a more integrated capability is available.
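One way to picture that lifecycle review is as a simple, repeatable rule applied to every insight application in the portfolio. The sketch below is only an assumption about how such a rule might look: the three dispositions come from the paragraph above, but the fields, the usage threshold, and the example applications are hypothetical and would need to reflect an organization's own governance criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    PRESERVE = "preserve as a standalone application"
    INTEGRATE = "integrate into a core application as a service"
    DECOMMISSION = "decommission"


@dataclass
class InsightApp:
    name: str
    monthly_active_users: int
    overlaps_core_app: bool       # does a core application serve the same process?
    integrated_alternative: bool  # is a more integrated capability already available?


def review(app: InsightApp, min_users: int = 25) -> Disposition:
    """Hypothetical review rule; the threshold and ordering are assumptions."""
    if app.integrated_alternative or app.monthly_active_users < min_users:
        return Disposition.DECOMMISSION
    if app.overlaps_core_app:
        return Disposition.INTEGRATE
    return Disposition.PRESERVE


portfolio = [
    InsightApp("churn-dashboard", 140, False, False),
    InsightApp("invoice-anomaly-sidecar", 60, True, False),
    InsightApp("legacy-forecast-bot", 8, False, False),
]
decisions = {app.name: review(app) for app in portfolio}
for name, decision in decisions.items():
    print(f"{name}: {decision.value}")
```

Running a review like this on a regular cadence is one way to keep the number of sidecar applications from growing unnoticed.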

Summing Up

While the experience of a consumer of technology likely will change and (hopefully) become more intuitive and convenient with the introduction of AI and agents, the need to be thoughtful in how we develop an application architecture strategy, leverage components and services, and put the end user first will be priorities if we are going to obtain the value of these capabilities at an enterprise level. Intelligent applications are where we are headed, and our ability to work with an integrated vision of the future will be critical to realizing the benefits available in that world.

The next article will focus on how we should think about the data and analytics environment in the future state.

Up Next: Deconstructing Data-Centricity

I hope the ideas were worth considering. Thanks for spending the time to read them. Feedback is welcome as always.

-CJG 07/30/2025
