Context

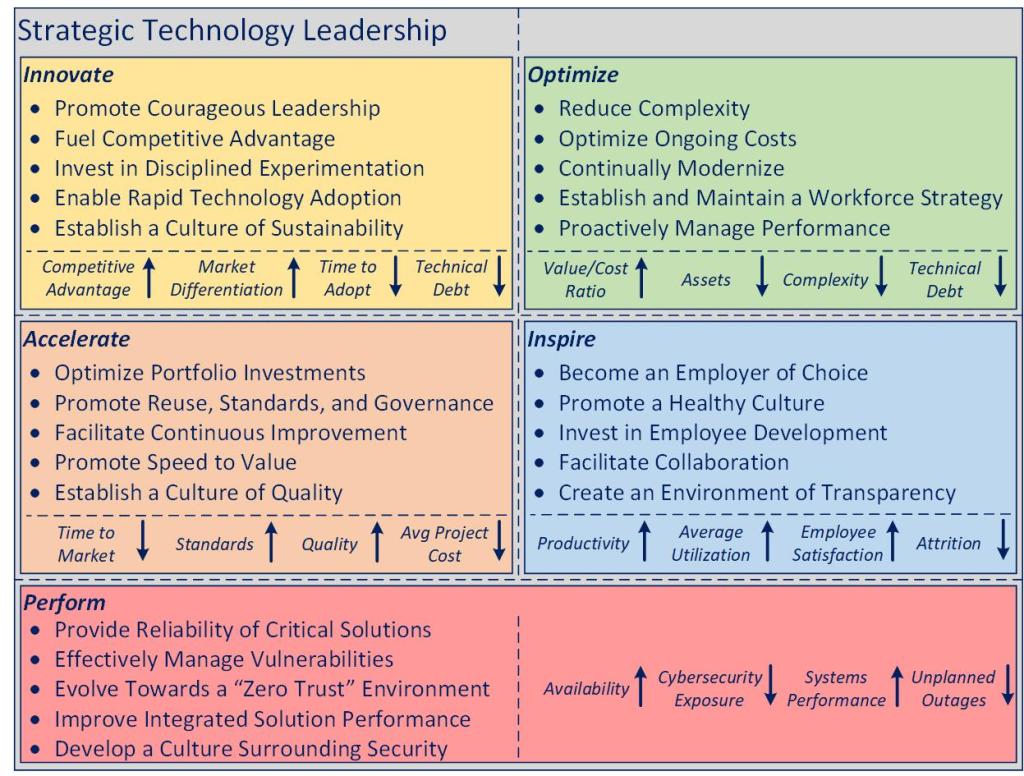

One of the things that I’ve come to appreciate over the course of time is the value of what I call “actionable strategy”. By this, I mean a blend of the conceptual and practical, a framework that can be used to set direction and organize execution without being too prescriptive, while still providing a vision and mental model for leadership and teams to understand and align on the things that matter.

Without a strategy, you can have an organization largely focused on execution, but that tends to create significant operating or technical debt and complexity over time, ultimately having an adverse impact on competitive advantage, slowing delivery, and driving significant operating cost. Similarly, a conceptual strategy that doesn’t provide enough structure to organize and facilitate execution tends to create little impact over time as teams don’t know how to apply it in a practical sense, or it can add significant overhead and cost in the administration required to map its strategic objectives to the actual work being done across the organization (given they aren’t aligned up front or at all). The root causes of these situations can vary, but the important point is to recognize the criticality of an actionable business-aligned technology strategy and its role in guiding execution (and thereby the value technology can create for an organization).

In reality, there are so many internal and external factors that can influence priorities in an organization over time, that one’s ability to provide continuity of direction with clear conceptual outcomes (while not being too hung up on specific “tasks”) can be important in both creating the conditions for transformation and sustainable change without having to “reset” that direction very often. This is the essence of why framework-centric thinking is so important in my mind. Sustainable change takes time, because it’s a mindset, a culture, and way of operating. If a strategy is well-conceived and directionally correct, the activities and priorities within that model may change, but the ability to continue to advance the organization’s goals and create value should still exist. Said differently: Strategies are difficult to establish and operationalize. The less you have to do a larger-scale reset of them, the better. It’s also far easier to adjust priorities and activities than higher-level strategies, given the time it takes (particularly in larger organizations) to establish awareness of a vision and strategy. This is especially true if the new direction represents a departure from what has been in place for some time.

To be clear, while there is a relationship between this topic and what I covered in my article on Excellence By Design, the focus there is more on the operation and execution of IT within an organization, not so much the vision and direction of what you’d ideally like to accomplish overall.

The rest of this article will focus on the various dimensions that I believe comprise a good strategy, how I think about them, and ways that they could create measurable impact. There is nothing particularly “IT-specific” about these categories (i.e., this is conceptually akin to ‘better, faster, cheaper’) and I would argue they could apply equally well to other areas of a business, but differ in how they translate on an operating level.

In relation to the Measures outlined in each of the sections below, a few notes for awareness:

- I listed several potential areas to consider and explore in each section, along with some questions that come to mind with each.

- The goal wasn’t to be exhaustive or to suggest that I’d recommend tracking any or all of them on an “IT Scorecard”, but rather to provide some food for thought

- My general point of view is that it’s better to track as little as possible from an “IT reporting” standpoint, unless there is intention to leverage those metrics to drive action and decisions. My experience with IT metrics historically is that they are overreported and underleveraged (and therefore not a good use of company time and resources). I touch on some of these concepts in the article On Project Health and Transparency

Innovate

What It Is and Why It Matters

Stealing from my article on Excellence By Design: “Relentless innovation is the notion that anything we are doing today may be irrelevant tomorrow, and therefore we should continuously improve and reinvent our capabilities to ones that create the most long-term value.”

Technology is evolving at a rate faster than most organizations’ ability to adopt or integrate those capabilities effectively. As a result, a company’s ability to leverage these advances becomes increasingly challenging over time, especially to the degree that the underlying environment isn’t architected in a manner to facilitate their integration and adoption.

The upshot of this is that the benefits to be achieved could be marginalized as any attempts to capitalize on these innovations will likely become point solutions or one-off efforts that don’t scale or create a different form of technical debt over time. This is very evident in areas like analytics where capabilities like GenAI and other artificial intelligence-oriented solutions are only as effective as the underlying architecture of the environment into which they are integrated. Are wins possible that could be material from a business standpoint? Absolutely yes. Will it be easy to scale them if you don’t invest in foundational things to enable that? Very likely not.

The positive side of this is that technology is in a much different place than it was ten or twenty years ago, where it can significantly improve or enhance a company’s capabilities or competitive position. Even in the most arcane of circumstances, there likely is an opportunity for technology to fuel change and growth in a digital business environment, whether that is internal to the operations of a company, or through its interactions with customers, suppliers, or partners (or some combination thereof).

Key Dimensions to Consider

Thinking about this area, a number of dimensions came to mind:

- Promoting Courageous Leadership

- This begins by acknowledging that leadership is critical to setting the stage for innovation over time

- There are countless examples of organizations that were market leaders who ultimately lost their competitive advantage due to complacency or an inability to see or respond to changing market conditions effectively

- Fueling Competitive Advantage

- This is about understanding how technology helps create competitive advantage for a company and focusing in on those areas rather than trying to do everything in an unstructured or broad-based way, which would likely diffuse focus, spread critical resources, and marginalize realized benefits over time

- Investing in Disciplined Experimentation

- This is about having a well-defined process to enable testing out new business and technology capabilities in a way that is purposeful and that creates longer-term benefits

- The process aspect of this is important, as it is relatively easy to spin up a lot of “innovation and improvement” efforts without taking the time to understand and evaluate the value and implications of those activities in advance. The problem is that you can either end up wasting money where the return on investment isn’t significant, or develop concepts that can’t easily be scaled to production-level solutions, which will limit their value in practice

- Enabling Rapid Technology Adoption

- This dimension is about understanding the role of architecture, standards, and governance in integrating and adopting new technical capabilities over time

- As an example, an organization with an established component (or micro-service) architecture and integration strategy should be able to test and adopt new technologies much faster than one without them. That isn’t to suggest it can’t be done, but rather that the cost and time to execute those objectives will increase as delivery becomes more of a brute force situation than one enabled by a well-architected environment

- Establishing a Culture of Sustainability

- Following onto the prior point, as new solutions are considered, tested, and adopted, product lifecycle considerations should come into play.

- Specifically, as part of the introduction of something new, is it possible to replace or retire something that currently exists?

- At some point, when new technologies and solutions are introduced in a relatively ungoverned manner, it will only be a matter of time before the cost and complexity of the technology footprint will choke an organization’s ability to continue to both leverage those investments and to introduce new capabilities rapidly.

Measuring Impact

Several ways to think about impact:

- Competitive Advantage

- What is a company’s absolute position relative to its competition in the markets where it competes, and on metrics relative to those markets?

- Market Differentiation

- Is innovation fueling new capabilities not offered by competitors?

- Is the capability gap widening or narrowing over time?

- I separated these first two points, though they are arguably flavors of the same thing, to emphasize the importance of looking at both capabilities and outcomes from a competitive standpoint. One can be doing very well from a competitive standpoint relative to a given market, but have competitors developing or extending their capabilities faster, in which case, there could be risk of the overall competitive position changing in time

- Reduced Time to Adopt New Solutions

- What is the average length of time between a major technology advancement (e.g., cloud computing, artificial intelligence) becoming available and an organization’s ability to perform meaningful experiments and/or deploy it in a production setting?

- What is the ratio of investment on infrastructure in relation to new technologies meant to leverage it over time?

- Reduced Technical Debt

- What percentage of experiments turn into production solutions?

- How easy is it to scale those production solutions (vertically or horizontally) across an enterprise?

- Are new innovations enabling the elimination of other legacy solutions? Are they additive and complementary or redundant at some level?
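As food for thought on how two of the questions above might be quantified, here is a minimal sketch. It assumes an organization keeps even a simple log of its experiments; the `Experiment` record, its field names, and the sample entries are all hypothetical and only there to make the arithmetic concrete.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Experiment:
    """Hypothetical record of one innovation experiment."""
    name: str
    started: date
    in_production: Optional[date] = None  # None if it never reached production


def conversion_rate(experiments: list[Experiment]) -> float:
    """Share of experiments that turned into production solutions (0.0 to 1.0)."""
    if not experiments:
        return 0.0
    promoted = sum(1 for e in experiments if e.in_production is not None)
    return promoted / len(experiments)


def average_days_to_production(experiments: list[Experiment]) -> Optional[float]:
    """Mean days from experiment start to production, for promoted experiments only."""
    durations = [
        (e.in_production - e.started).days
        for e in experiments
        if e.in_production is not None
    ]
    return sum(durations) / len(durations) if durations else None


# Illustrative sample data only; not drawn from any real portfolio.
experiments = [
    Experiment("genai-summarization", date(2024, 1, 1), date(2024, 4, 10)),
    Experiment("vector-search", date(2024, 2, 1)),
    Experiment("edge-telemetry", date(2024, 3, 1)),
    Experiment("rpa-invoicing", date(2024, 3, 15)),
]

print(f"Conversion rate: {conversion_rate(experiments):.0%}")
print(f"Average days to production: {average_days_to_production(experiments)}")
```

A low conversion rate is not necessarily bad, since experiments are supposed to fail sometimes. The more useful signal is probably the trend over time, read alongside whether the promoted solutions actually retire something.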

Accelerate

What It Is and Why It Matters

“Take as much time as you need, let’s make sure we do it right, no matter what.” This is a declaration that I don’t think I’ve ever heard in nearly thirty-two years in technology. Speed matters, whether you call it “first mover advantage” or apply any other label to the desire to produce value at a pace that is at or beyond an organization’s ability to integrate and assimilate all the changes.

That being said, the means to speed is not just a rush to iterative methodology. The number of times I’ve heard or seen “Agile Transformation” (normally followed by months of training people on concepts like “Scrum meetings”, “Sprints”, and “User Stories”) posed as a silver bullet to providing disproportionate delivery results goes beyond my ability to count and it’s unfortunate. Similarly, I’ve heard glorified versions of perpetual hackathons championed, where the delivery process involves cobbling together solutions in a “launch and learn” mindset that ultimately are poorly architected, can’t scale, aren’t repeatable, create massive amounts of technical debt, and never are remediated in production. These are cases where things done in the interest of “speed” actually destroy value over time.

To be fair, moving from monolithic to iterative (or product-centric) approaches and DevSecOps is generally a good thing to do. Does this remedy issues in a business/IT relationship, solve for a lack of architecture, standards and governance, address an overall lack of portfolio-level prioritization, or a host of other issues that also affect operating performance and value creation over time? Absolutely not.

The dimensions discussed in this section are meant to highlight a few areas beyond methodology that I believe contribute to delivering value at speed, and ones that are often overlooked in the interest of a “quick fix” (which changing methodology generally isn’t).

Key Dimensions to Consider

Dimensions that are top of mind in relation to this area:

- Optimizing Portfolio Investments

- Accelerating delivery begins by first taking a look at the overall portfolio makeup and ensuring the level of ongoing delivery is appropriate to the capabilities of the organization. This includes utilization of critical knowledge resources (e.g., planning on a named resource versus an FTE-basis), leverage of an overall release strategy, alignment of variable capacity to the right efforts, etc.

- Said differently, when an organization tries to do too much, it tends to do a lot of things ineffectively, even under the best of circumstances. This does not help enhance speed to value at the overall level

- Promoting Reuse, Standards, and Governance

- This dimension is about recognizing the value that frameworks, standards and governance (along with architecture strategy) play in accelerating delivery over time, because they become assets and artifacts that can be leveraged on projects to reduce risk as well as effort

- Where these things don’t exist, there almost certainly will be an increase in project effort (and duration) and technical debt that ultimately will slow progress on developing and integrating new solutions into the landscape

- Facilitating Continuous Improvement

- This dimension is about establishing an environment where learning from mistakes is encouraged and leveraged proactively on an ongoing basis to improve the efficacy of estimation, planning, execution, and deployment of solutions

- It’s worth noting that this is as much an issue of culture as of process, because teams need to know that it is safe, expected, and appreciated to share learnings on delivery efforts if there is to be sustainable improvement over time

- Promoting Speed to Value

- This is about understanding the delivery process, exploring iterative approaches, ensuring scope is managed and prioritized to maximize impact, and so on

- I’ve written separately that methodology only provides a process, not necessarily a solution to underlying cultural or delivery issues that may exist. As such, it is part of what should be examined and understood in the interest of breaking down monolithic approaches and delivering value at a reasonable pace and frequency, but it is definitely not a silver bullet. Silver bullets don’t exist, nor will they ever.

- Establishing a Culture of Quality

- In the proverbial “Good, Fast, or Cheap” triangle, the general assumption is that you can only choose two of the three as priorities and accept that the third will be compromised. Given that most organizations want results to be delivered quickly and don’t have unlimited financial resources, the implication is that quality will be the dimension that suffers.

- The irony of this premise is that, where quality is compromised repeatedly on projects, the general outcome is that technical debt will be increased, maintenance effort along with it, and future delivery efforts will be hampered as a consequence of those choices

- As a result, in any environment where speed is important, quality needs to be a significant focus so ongoing delivery can be focused as much as possible on developing new capabilities and not fixing things that were not delivered properly to begin with

Measuring Impact

Several ways to think about impact:

- Reduced Time to Market

- What is the average time from approval to delivery?

- What is the percentage of user stories/use cases delivered per sprint (in an iterative model)? What level of spillover/deferral is occurring on an ongoing basis (this can be an indicator of estimation, planning, or execution-related issues)?

- Are retrospectives part of the delivery process and valuable in terms of their learnings?

- Increase in Leverage of Standards

- Is there an architecture review process in place? Are standards documented, accessible, and in use? Are findings from reviews being implemented as an outcome of the governance process?

- What percentage of projects are establishing or leveraging reusable common components, services/APIs, etc.?

- Increased Quality

- Are defect injection rates trending in a positive direction?

- What level of severity 1/2 issues are uncovered post-production in relation to those discovered in testing pre-deployment (efficacy of testing)?

- Are criteria in place and leveraged for production deployment (whether leveraging CI/CD processes or otherwise)?

- Is production support effort for critical solutions decreasing over time (non-maintenance related)?

- Lower Average Project Cost

- Is the average labor cost/effort per delivery reducing on an ongoing basis?
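To make a couple of these measures concrete, the sketch below shows one way the spillover and testing-efficacy questions could be expressed as simple ratios. The function names, inputs, and sample numbers are assumptions for illustration; real definitions would depend on how an organization counts stories and classifies defects.

```python
def spillover_rate(committed: int, delivered: int) -> float:
    """Fraction of committed stories not delivered in the sprint (0.0 to 1.0)."""
    if committed <= 0:
        raise ValueError("committed must be a positive number of stories")
    # Delivering more than was committed counts as zero spillover.
    return max(committed - delivered, 0) / committed


def defect_escape_ratio(found_in_production: int, found_in_testing: int) -> float:
    """Share of severity 1/2 defects first discovered after deployment."""
    total = found_in_production + found_in_testing
    return found_in_production / total if total else 0.0


# Hypothetical sprint: 20 stories committed, 15 delivered.
print(f"Spillover: {spillover_rate(20, 15):.0%}")

# Hypothetical release: 2 severe defects found in production, 18 caught in testing.
print(f"Defect escape ratio: {defect_escape_ratio(2, 18):.0%}")
```

Neither number means much in isolation. A spillover rate that stays high sprint after sprint points toward estimation or planning issues, while a rising escape ratio suggests that testing is not catching what it should before deployment.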

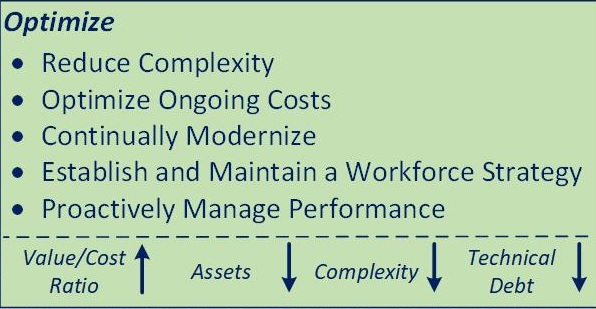

Optimize

What It Is and Why It Matters

Along with the pursuit of speed, it is equally important to pursue “simplicity” in today’s complex technology environment. With so many layers now being present, from hosted to cloud-based solutions, package and custom software, internal and externally integrated SaaS and PaaS solutions, digital equipment and devices, cyber security requirements, analytics solutions, and monitoring tools… complexity is everywhere. In large organizations, the complexity tends to be magnified for many reasons, which can create additional complexities in and across the technology footprint and organizations required to design, deliver, and support integrated solutions at scale.

My experience with optimization historically is that it tends to be too reactive a process, and generally falls by the wayside when business conditions are favorable. The problem with this is the bloat and inefficiency that tends to be bred in a growth environment, which ultimately reduces the value created by IT with increasing levels of spend. That is why a purposeful approach that is part of a larger portfolio allocation strategy is important. Things like workforce and sourcing strategy, modernization, ongoing rationalization and simplification, standardization and continuous improvement are important to offset what otherwise could lead to a massive “correction” the minute conditions change. I would argue that, similar to performance improvement in software development, an organization should never be so cost inefficient that a massive correction is even possible. For that to be the case, something extremely disruptive should have occurred, otherwise the discipline in delivery and operations likely wasn’t where it needed to be leading up to that adjustment.

I’ve highlighted a few dimensions that are top of mind in regard to ongoing optimization, but have written an entire article on optimizing value over cost that is a more thorough exploration of this topic if this is of interest (Optimizing the Value of IT).

Key Dimensions to Consider

Dimensions that are top of mind in relation to this area:

- Reducing Complexity

- There is some very simple math related to complexity in an IT environment, which is that increasing complexity drives a (sometimes disproportionate) increase in cost and time to deliver solutions, especially where there is a lack of architecture standards and governance

- In areas like Integration and Analytics, this is particularly important, given they are both foundational and enable a significant amount of business capabilities when done well

- It is also important to clarify that reducing complexity doesn’t necessarily equate to reducing assets (applications, data solutions, technologies, devices, integration endpoints, etc.), because it could be the case that the number of desired capabilities in an organization requires an increasing number of solutions over time. That being said, with the right integration architecture and associated standards, as an example, the ability to integrate and rationalize solutions will be significantly easier and faster than without them (which is complexity of a different kind)

- Optimizing Ongoing Costs

- I recently wrote an article on Optimizing the Value of IT, so I won’t cover all that material again here

- The overall point is that there are many levers available to increase value while managing or reducing technology costs in an enterprise

- That being said, aggregate IT spend can and may increase over time, and be entirely appropriate depending on the circumstances, as long as the value delivered increases proportionately (or in excess of that amount)

- Continually Modernizing

- The mental model that I’ve had for support for a number of years is to liken it to city planning and urban renewal. Modernizing a footprint is never a one-time event; it needs to be a continuous process

- Where this tends to break down in many organizations is the “Keep the Lights On” concept, which suggests that maintenance spend should be minimized on an ongoing basis to allow the maximum amount of funding for discretionary efforts that advance new capabilities

- The problem with this logic is that it can tend to lead to neglect of core infrastructure and solutions that then become obsolete, unsupportable, pose security risks, and that approach end of life with only very expensive and disruptive paths to upgrade or modernize them

- It would be far easier to carve out a portion of the annual spend allocation for a thoughtful and continuous modernization where these become ongoing efforts, are less disruptive, and longer-term costs are managed more effectively at lower overall risk

- Establishing and Maintaining a Workforce Strategy

- I have an article in my backlog for this blog around workforce and sourcing strategy, having spent time developing both in the past, so I won’t elaborate too much on this right now other than to say it’s an important component in an organizational strategy for multiple reasons, the largest being that it enables you to flex delivery capability (up and down) to match demand while maintaining quality and a reasonable cost structure

- Proactively Managing Performance

- Unpopular though it is, my experience in many of the organizations in which I’ve worked over the years has been that performance management is handled on a reactive basis

- Particularly when an organization is in a period of growth, notwithstanding extreme situations, the tendency can be to add people and neglect the performance management process with an “all hands on deck” mentality that ultimately has a negative impact on quality, productivity, morale, and other measures that matter

- This isn’t an argument for formula-driven processes, as I’ve worked in organizations that have forced performance curves against an employee population, and sometimes to significant, detrimental effect. My primary argument is that I’d rather have an environment with 2% involuntary annual attrition (conceptually), than one where it isn’t managed at all, market conditions change, and suddenly there is a push for a 10% reduction every three years, where competent “average” talent is caught in the crossfire. These over-corrections cause significant disruption, have material impact on employee loyalty, productivity, and morale, and generally (in my opinion) are the result of neglecting performance management on an ongoing basis

Measuring Impact

Several ways to think about impact:

- Increased Value/Cost Ratio

- Is the value delivered for IT-related effort increasing in relation to cost (whether the latter is increasing, decreasing, or remaining flat)?

- Reduced Overall Assets

- Has the number of duplicated/functionally equivalent/redundant assets (applications, technologies, data solutions, devices, etc.) decreased over time?

- Lower Complexity

- Is the percentage of effort on the average delivery project spent on addressing issues related to a lack of standards, unique technologies, redundant systems, etc. reducing over time?

- Lower Technical Debt

- What percentage of overall IT spend is committed to addressing quality, technology, end-of-life, or non-conformant solutions (to standards) in production on an ongoing basis?
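As a rough illustration of the first and last measures, the sketch below tracks a value/cost ratio across periods and the share of spend going to technical debt. How “value” is quantified is the hard part and is deliberately left open here; the function names and all figures are hypothetical.

```python
def value_cost_ratio(value: float, cost: float) -> float:
    """Value delivered per unit of IT cost for one period."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return value / cost


def is_improving(ratios: list[float]) -> bool:
    """True if every period's ratio is higher than the one before it."""
    return all(later > earlier for earlier, later in zip(ratios, ratios[1:]))


def technical_debt_share(remediation_spend: float, total_spend: float) -> float:
    """Fraction of total IT spend committed to remediating debt in production."""
    if total_spend <= 0:
        raise ValueError("total_spend must be positive")
    return remediation_spend / total_spend


# Hypothetical (value, cost) pairs for three consecutive years, in millions.
periods = [(100.0, 50.0), (130.0, 60.0), (150.0, 60.0)]
ratios = [value_cost_ratio(value, cost) for value, cost in periods]

print([round(r, 2) for r in ratios])
print("Improving:", is_improving(ratios))
print(f"Technical debt share: {technical_debt_share(12.0, 80.0):.0%}")
```

Note that cost rises between the first two periods in this sample while the ratio still improves, which is consistent with the earlier point that aggregate IT spend can increase appropriately as long as the value delivered increases at least proportionately.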

Inspire

What It Is and Why It Matters

Having written my last article on culture, I’m not going to dive deeply into the topic, but I believe the subject of employee engagement and retention (“People are our greatest asset…”) is often spoken about, but not proportionately acted on in deliberate ways. As an example, telling employees their learning and development is important, but then either not providing the means for them to receive training and education or putting “delivery” needs above that growth on an ongoing basis, is far different from acting on it. It’s expedient on a short-term level, but the cost to an organization in loyalty, morale, and ultimately productivity (and results) is significant.

Inspiration matters. I fundamentally believe you achieve excellence as an organization by enrolling everyone possible in creating a differentiated and special workplace. Having worked in environments where there was a contagious enthusiasm in what we were doing and also in ones I’d consider relatively toxic and unhealthy, there’s no doubt about the impact it has on the investment people make in doing their best work.

Following onto this, I believe there is also a distinction to be drawn in engaging the “average” employees across the organization versus targeting the “top performers”. I have written about this previously, but top performers, while important to recognize and leverage effectively, don’t generally struggle with motivation (it’s part of what makes them top performers to begin with). The problem is that placing a disproportionate amount of management focus on this subset of the employee population can have a significant adverse impact, because the majority of an organization is not “top performers” and that’s completely fine. If the engagement, output, and productivity of the average employee is elevated even marginally, the net impact to organizational results should be fairly significant in most environments.

The dimensions below represent a few ways that I think about employee engagement and creating an inspired workplace.

Key Dimensions to Consider

Dimensions that are top of mind in relation to this area:

- Becoming an Employer of Choice

- Reputation matters. Very simple, but relevant point

- This becomes real in how employees are treated on a cultural and day-to-day level, compensated, and managed even in the situation where they exit the company (willingly or otherwise)

- Having worked for and with organizations that have had a “reputation” that is unflattering in certain ways, the thing I’ve come to be aware of over time is how important that quality is, not only when you work for a company, but the perception of it that then becomes attached to you afterwards

- Two very simple questions to employees that could serve as a litmus test in this regard:

- If you were looking for a job today, knowing what you know now, would you come work here again?

- How likely would you be to recommend this as a place to work to a friend?

- Promoting a Healthy Culture

- Following onto the previous point, I recently wrote about The Criticality of Culture, so I won’t delve into the mechanics of this beyond the fact that dedicated, talented employees are critical to every organization, of any size, and the way in which they are treated and the environment in which they work is crucial to optimizing the experience for them and the results that will be obtained for the organization as a whole

- Investing in Employee Development

- Having worked in organizations where there was an explicit, dedicated commitment to ongoing education and development, and in others where there was “never time” to invest in it or where “delivery commitments” interfered with people’s learning and growth, I’ve always found the consequent impact of the latter on productivity and organizational performance to be fairly obvious and very negative

- A healthy culture should create space for people to learn and grow their skills, particularly in technology, where the landscape is constantly changing and there is a substantial risk of skills becoming atrophied if not reinforced and evolved as things change.

- This isn’t an argument for random training, of course, as there should be applicability for the skills into which an organization invests on behalf of its employees, but it should be an ongoing priority as much as any delivery effort so you maintain your ability to integrate new technology capabilities as and when they become available over time

- Facilitating Collaboration

- This and the next dimension are both discussed in the above article on culture, but the overall point is that creating a productive workplace goes beyond the individual employee to encouraging collaboration and seeking the kind of results discussed in my article on The Power of N

- The secondary benefit from a collaborative environment is the sense of “connectedness” it creates across teams when it’s present, which would certainly help productivity and creativity/solutioning when part of a healthy, positive culture

- Creating an Environment of Transparency

- Understanding there are always certain things that require confidentiality or limited distribution (or both), the level of transparency in an environment helps create connection between the individual and the organization as well as helping to foster and engender trust

- Reinforcing the criticality of communication in creating an inspiring workplace is extremely obvious, but having seen situations where the opposite is in place, it’s worth noting regardless

Measuring Impact

Several ways to think about impact:

- Improved Productivity

- Is more output being produced on a per FTE basis over time?

- Are technologies like Copilot being leveraged effectively where appropriate?

- Improved Average Utilization

- Are utilization statistics reflecting healthy levels (i.e., not significantly over or under allocated) on an ongoing basis (assuming plan/actuals are reasonably reflected)?

- Improved Employee Satisfaction

- Are employee surveys trending in a positive direction in terms of job satisfaction?

- Lower Voluntary Attrition

- Are metrics declining in relation to voluntary attrition?

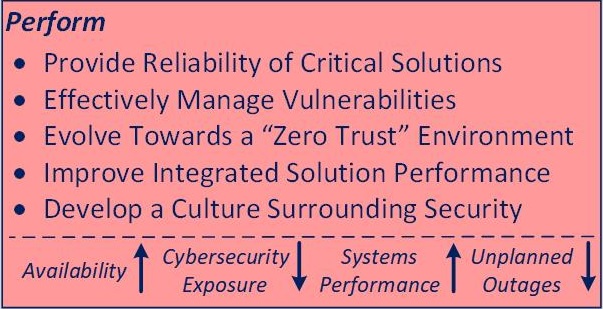

Perform

What It Is and Why It Matters

Very simply said: all the aspirations to innovate, grow, and develop capabilities don’t mean a lot if your production environment doesn’t support business and customer needs exceptionally well on a day-to-day basis.

As a former account executive and engagement manager in consulting at various organizations, any account strategy for me always began with one statement: “Deliver with quality”. If you don’t block and tackle well in your execution, the best vision and set of strategic goals will quickly be set aside until you do. This is fundamentally about managing infrastructure, availability, performance of critical solutions, and security. In all cases, it can be easy to operate in a reactive capacity and be very complacent about it, rather than looking for ways to improve, simplify, and drive greater stability, security, and performance over time.

As an example, I experienced a situation where an organization spent tens of millions of dollars annually on production support, planning for things that essentially hadn’t broken yet, but had no explicit plan or spend targeted at addressing the root cause of the issues themselves. Thankfully, we were able to reverse that situation and plan for some proactive efforts that ultimately took millions out of that spend by simply executing a couple of projects. In that case, the issue was the mindset, assuming that we had to operate in a reactive rather than proactive way, while the effort and dollars being consumed could have been better applied developing new business capabilities rather than continuing to band-aid issues we’d never addressed.

Another situation that is fairly prevalent today is the role of FinOps in managing cloud costs. Without governance, the convenience of spinning up cloud assets and services can add considerable complexity, cost, and security exposure, all under the promise of shifting from a CapEx to OpEx environment. The reality is that managing cloud effectively takes maturity, discipline, and sustained focus so that it doesn’t become problematic over time.

There are many ways to think about managing and optimizing production, but the dimensions that come to mind as worthy of some attention are expressed below.

Key Dimensions to Consider

Dimensions that are top of mind in relation to this area:

- Providing Reliability of Critical Solutions

- Having worked with a client where the health of critical production solutions was poor enough that restoring it became the top IT priority, I can say this can’t be overlooked in any strategy

- It’s great to advance capabilities through ongoing delivery work, but if you can’t operate and support critical business needs on a daily level, it doesn’t matter

- Effectively Managing Vulnerabilities

- With the increase in complexity in managing technology environments today, internal and external to an organization, cyber exposure is growing at a rate faster than anyone can manage it fully

- To that end, having a comprehensive security strategy, from managing external to internal threats, ransomware, etc. (from the “outside-in”) is critical to ensuring ongoing operations with minimal risk

- Evolving Towards a “Zero Trust” Environment

- Similar to the previous point, while the definition of “zero trust” continues to evolve, managing a conceptual “least privilege” environment (from the “inside-out”) that protects critical assets, applications, and data is an imperative in today’s complex operating environment

- Improving Integrated Solution Performance

- Again, with the increasing complexity and distribution of solutions in a connected enterprise (including third party suppliers, partners, and customers), the end user experience of these solutions is an important consideration that will only increase in importance

- While there are various solutions for application performance monitoring (APM) on the market today, the need for integrated monitoring, analytics, and optimization tools will likely increase over time to help govern and manage critical solutions where performance characteristics matter

- Developing a Culture Surrounding Security

- Finally, in relation to managing an effective (physical and cyber) security posture, deliberate strategies for managing vulnerabilities and zero trust are the methods by which risk is managed and mitigated, but there is equally a mindset that needs to be established and integrated into an organization for risk to be effectively managed

- This dimension is meant to recognize the need to provide adequate training, review key delivery processes (along with associated roles and responsibilities), and evaluate tools and safeguards to create an environment conducive to managing security overall

Measuring Impact

Several ways to think about impact:

- Increased Availability

- Is the reliability of critical production solutions improving over time and within SLAs?

- Lower Cybersecurity Exposure

- Is a thoughtful plan for managing cybersecurity in place, being executed, monitored, and managed on a continuous basis?

- Do disaster recovery and business continuity plans exist and are they being tested?

- Improved Systems Performance

- Are end user SLAs met for critical solutions on an ongoing basis?

- Lower Unplanned Outages

- Are unplanned outages or events declining over time?
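To make the availability and outage questions above a little more concrete, here is a minimal sketch of how they could be tracked month over month. Everything in it is an assumption for illustration: the `MonthlyRecord` structure, the sample figures, and the 99.9% SLA target are hypothetical, and a real organization would pull these numbers from its own monitoring and incident tooling.

```python
from dataclasses import dataclass


@dataclass
class MonthlyRecord:
    """Hypothetical monthly summary for one critical solution."""
    month: str                      # reporting period, e.g. "2023-09"
    total_minutes: int              # minutes in the reporting period
    unplanned_outage_minutes: int   # minutes of unplanned downtime
    unplanned_outage_count: int     # number of unplanned outage events


def availability_pct(record: MonthlyRecord) -> float:
    """Availability as a percentage of the reporting period."""
    uptime = record.total_minutes - record.unplanned_outage_minutes
    return 100.0 * uptime / record.total_minutes


def meets_sla(record: MonthlyRecord, target_pct: float = 99.9) -> bool:
    """True if availability is at or above the (assumed) SLA target."""
    return availability_pct(record) >= target_pct


def outages_declining(records: list[MonthlyRecord]) -> bool:
    """True if outage counts never increase month to month and end lower than they started."""
    counts = [r.unplanned_outage_count for r in records]
    if len(counts) < 2:
        return False  # not enough history to call it a trend
    never_increases = all(b <= a for a, b in zip(counts, counts[1:]))
    return never_increases and counts[-1] < counts[0]


# Illustrative data only; not taken from any real environment.
records = [
    MonthlyRecord("2023-09", 30 * 24 * 60, 90, 4),
    MonthlyRecord("2023-10", 31 * 24 * 60, 40, 2),
    MonthlyRecord("2023-11", 30 * 24 * 60, 20, 1),
]

for r in records:
    print(r.month, f"{availability_pct(r):.3f}%", "within SLA" if meets_sla(r) else "below SLA")
print("Unplanned outages declining:", outages_declining(records))
```

The point is less the arithmetic than the habit: if these figures are reviewed on a regular cadence, the conversation can shift from reacting to individual incidents toward asking whether the overall trend is moving in the right direction.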

Wrapping Up

Overall, the goal of this article was to share some concepts surrounding where I see the value of strategy for IT in enabling a business at an overall level. I didn’t delve into what the makeup of the underlying technology landscape is or should be (things I discuss in articles like The Intelligent Enterprise and Perspective on Impact Driven Analytics), because the point is to think about how to create momentum at an overall level in areas that matter… innovation, speed, value/cost, productivity, and performance/reliability.

Feedback is certainly welcome… I hope this was worth the time to read it.

-CJG 12/05/2023
