Setting the Stage

I’ve mentioned the concept of “framework-centric design” in a couple of articles now, and the goal here is to provide some clarity about where I believe technology and enterprise architecture strategy are heading. A challenge in trying to predict the future of this industry is the number of variables involved: how rapidly various capabilities evolve, how quickly new technologies are introduced, how the skills of the “average developer” advance along with the tools that enable their work, what new methods of integrating and analyzing data arise, and so on. I will make various assertions here. Undoubtedly some will come to pass and others will not (and that’s ok), but the goal is to look forward and consider the possibilities in the interest of stirring discussion and exploration. Innovation is born of such ideas, and hopefully this will be worth the read.

That being said, I believe the following developments will drive the evolution of how digital businesses operate:

- Market demand will drive a more “open systems” approach, reducing the proprietary constraints (e.g., on various SaaS and ERP platforms) that inhibit API-based interactions and open data exchange between applications

- Technologies will be introduced so rapidly that organizations will be forced to revisit integration so they can adopt new capabilities quickly enough to remain competitive

- Application architecture will evolve to the point where connected ecosystems and strategic outcomes, not the individual components themselves, become the focus of design.

- As an extension of the previous point, secure digital integration between an organization and its customers, partners, and suppliers will become more of a collaborative, fluid process than the transaction-centric model that is largely in place today (e.g., product design through fulfillment versus simple order placement).

- “Data-centric” thinking will be replaced by intelligent ecosystems and applications as the next logical step in its evolution. Data quality processes and infrastructure will be largely supplanted by AI/ML technology that interprets and resolves discrepancies and can autocorrect source data in upstream systems without user intervention, overcoming the behavioral obstacles that inhibit significant progress in this domain today.

- Cloud-enabled technology will reach edge computing environments to the degree that cloud native architecture becomes agnostic to the deployment environment and enables more homogeneous design across ecosystems (said differently, we can design towards an “ideal state” without artificially constraining solutions to a legacy hosted/on-prem environment). Bandwidth, not the physical environment, will decide where workloads execute.

Again, some of the above assertions may seem obvious or likely, while others are more speculative.

The point is to look at the cumulative effect of these changes on digital business and ask ourselves whether the investments we’re making today are taking us where we need to be, because the future will arrive sooner than we expect, and before we are prepared for it.

The Emerging Ecosystem

In the world of application architecture, the shift towards microservices, SaaS platforms, and cloud native technologies (to name a few) seems to have pulled us away from a more strategic conceptualization of the role ecosystems will play in the future environment.

Said simply: It’s not about the technology, it’s about the solution. Once you define the solution architecture, the technology part is solvable.

In my experience, too many discussions start with a package, a technology, or an infrastructure concept without a fundamental understanding of the problem being solved in the first place, let alone a concept of what “good” would look like in addressing that problem at a logical (i.e., solution architecture) level.

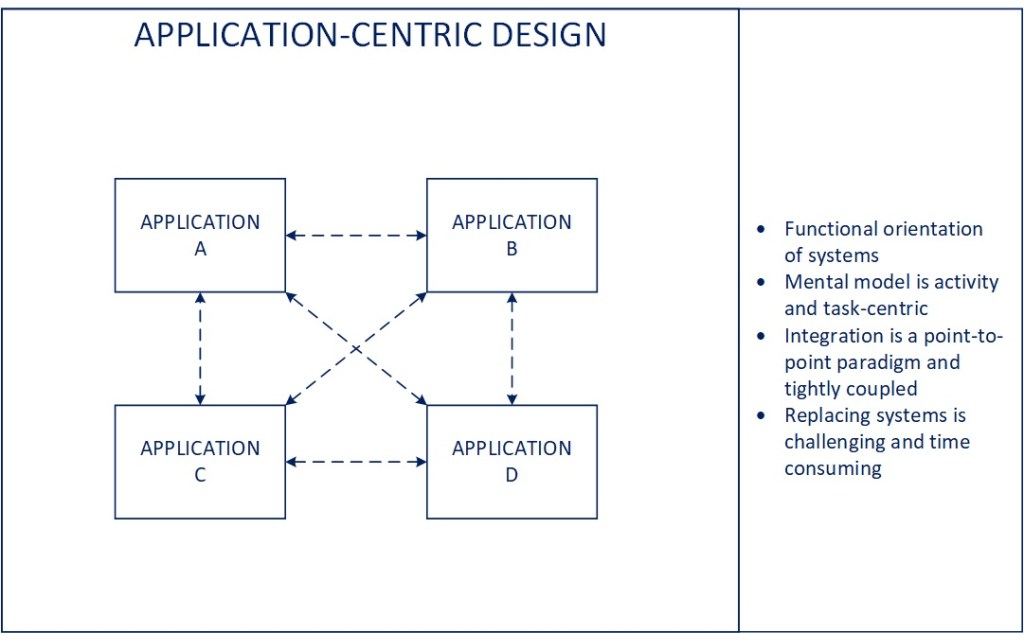

Looking back, we started with a simple premise: automate activities performed by “knowledge workers” to promote efficiency, consistency, collaboration, and so on, with the application as the logical center of the universe. In some respects, this can still be the case, as SaaS platforms, ERP systems, and even some custom-built (and much-beloved) homegrown solutions tend to become dominant players in the IT landscape. Those solutions then force the systems around them to adapt to their integration and data models, under constraints generally defined by the builders of that core system. In a relatively unstructured or legacy environment, that can lead to a significant amount of point-to-point integration, custom solutions, and, ultimately, technical debt. What seems like a good solution in these cases can eventually hinder evolution, as those primary elements of the technology footprint become a limiting constraint on advancing the overall capabilities of the ecosystems they inhabit.

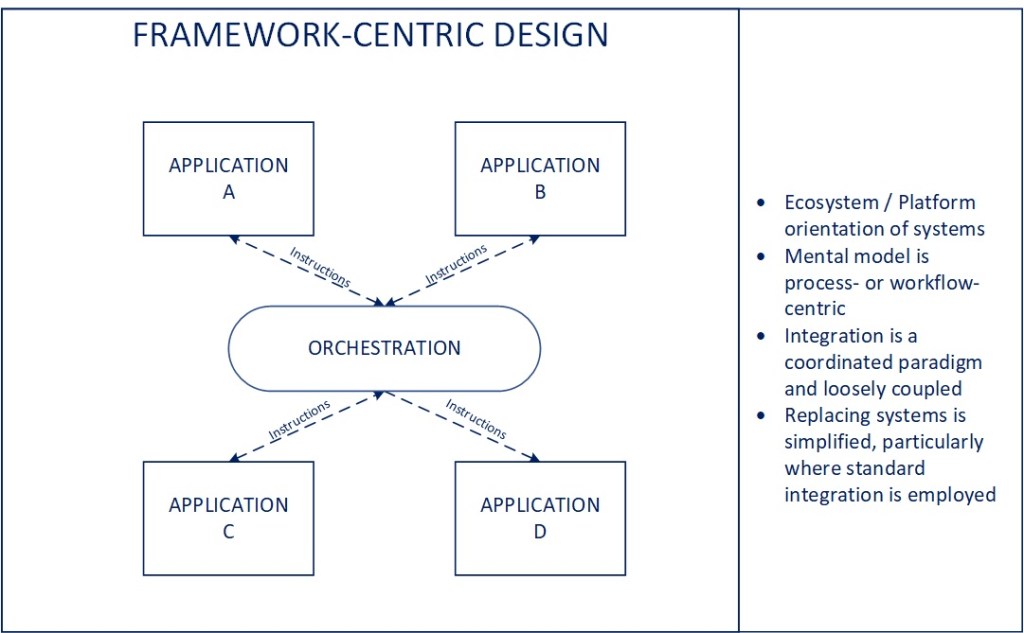

Moving one step in the right direction, we decouple systems and move towards a level of standard integration, whether through canonical objects or other means, that makes it easier to replace systems over time. A publish-and-subscribe approach also offers a way to support distributed transactions (e.g., an address change in a system of record being propagated to multiple dependent downstream applications) in a relatively linear, sequential manner (synchronous or otherwise).
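To make the address-change case concrete, here is a minimal Python sketch of a publish-and-subscribe fan-out. It assumes a toy in-process broker rather than any real middleware product; the topic name `customer.address_changed`, the `AddressChanged` event, and the billing and shipping subscribers are all hypothetical. The point it illustrates is that the system of record publishes one canonical event and never needs to know which applications consume it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class AddressChanged:
    """Hypothetical canonical event published by the system of record."""
    customer_id: str
    new_address: str


Handler = Callable[[AddressChanged], None]


class Broker:
    """Toy in-process publish/subscribe broker standing in for real middleware."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: AddressChanged) -> None:
        # Deliver synchronously, in subscription order: a linear, sequential fan-out.
        for handler in self._subscribers[topic]:
            handler(event)


# Hypothetical downstream applications, each keeping its own copy of the address.
billing_addresses: Dict[str, str] = {}
shipping_addresses: Dict[str, str] = {}


def update_billing(event: AddressChanged) -> None:
    billing_addresses[event.customer_id] = event.new_address


def update_shipping(event: AddressChanged) -> None:
    shipping_addresses[event.customer_id] = event.new_address


broker = Broker()
broker.subscribe("customer.address_changed", update_billing)
broker.subscribe("customer.address_changed", update_shipping)

# The system of record publishes once; it does not know who is listening.
broker.publish("customer.address_changed", AddressChanged("C-001", "12 Main St"))
print(billing_addresses, shipping_addresses)
```

In this sketch, replacing the shipping application means swapping one subscription rather than changing the publisher. A production broker would add durability, retries, and asynchronous delivery, all of which are omitted here.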

In a way, “enterprise middleware” has felt like a white whale of its own to me, having seen many attempts at it fail over years of delivery work, from the CORBA/DCOM days through the many iterations of “EAI” technologies since. The two largest problems I’ve seen in this regard are scope and priorities. Defining the transactions and objects that matter, modeling them properly from a business standpoint, and enforcing standardization across a potentially significant number of new and legacy systems is very difficult. I’ve seen “enterprise integration” over-scoped to the point that it doesn’t create value (e.g., standards for standards’ sake), which then undermines the credibility of the work that actually could reduce complexity, promote resiliency and interoperability, and ultimately improve speed to value in delivery. The other fairly prevalent obstacle is a lack of leadership engagement on the value of standard integration itself, to the point that it is de-prioritized in the course of ongoing delivery work (e.g., when a deadline is traded off against quality). In these situations, teams commit to “come back” and retrofit integrations to a strategic model but never do, and the integrity of the overall integration architecture strategy falls apart for lack of effective governance and adoption during execution.

Even with the best-defined and best-executed integration strategy, my contention is that integration alone falls short, because the endgame is not modeling transactions; it’s effective digital orchestration.

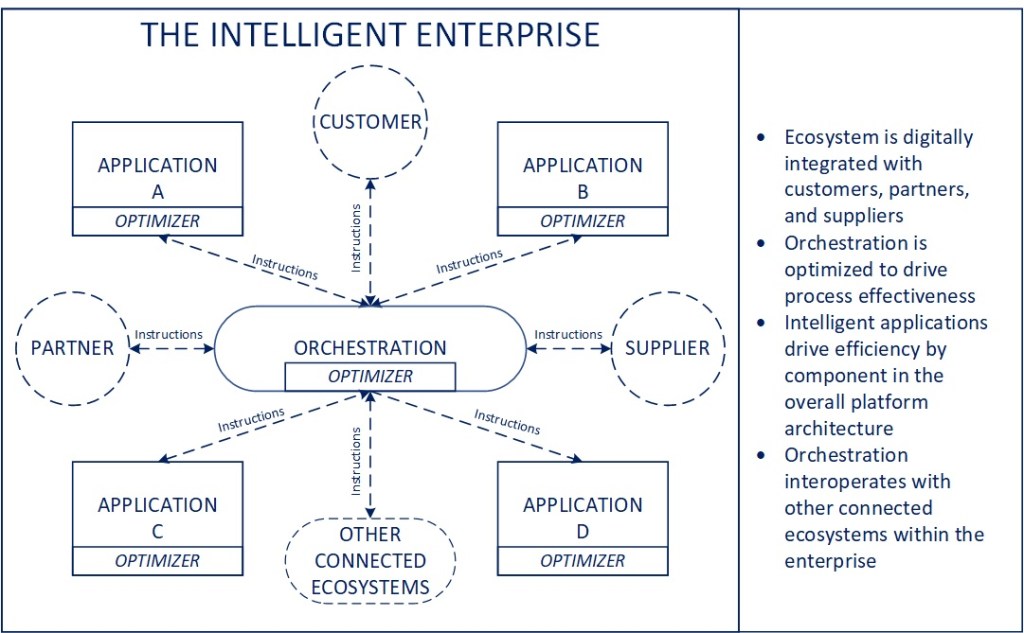

The shift in mental model is a pivot from application and transaction-centric thinking to an ecosystem-centric approach, where we look at the set of components in place as a whole and the ways in which they interoperate to bring about meaningful business outcomes. Effective digital orchestration is at the center of how the future ecosystems need to operate.

The goal in the future state is to think of the overall objective (e.g., demand generation for a sales ecosystem, production for manufacturing, distribution for transportation and logistics), then examine how each of the individual ecosystem components enables or supports those overall goals, how they interoperate, and what the coordination of those elements needs to be across various use cases (standard and exception-based) in an “ideal state” to optimize business outcomes. The applications move from the center of the model to become component endpoints, and the process by which they interact is managed at the center through defined but highly configurable process orchestration. The configurability is a critical dimension because, as I will address in the data section below, the continuous optimization of that process through AI/ML is part of how the model will eventually change the way digital enterprises perform.

Said slightly differently, in an integration-centric model, transactions are initiated, either in a publish-and-subscribe or request-response manner, they execute, and complete. In an orchestration-centric model, the ecosystem itself is perpetually “running” and optimizing in the interest of generating its desired business outcomes. In the case of a sales ecosystem, as an example, monitoring associated with a lag in demand could automatically initiate lead generation processes that request actions executed through a CRM solution. In manufacturing, delays in a production line could automatically trigger actions in downstream processes or equipment to limit the impact of those disruptions on overall efficiency and output. The ecosystem becomes “self-aware” and therefore more effective in delivering on overall value than its individual components can on their own.
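The sales example can be sketched as a small rule-driven orchestrator. This is a hypothetical illustration, not a reference design: the `Rule` and `Orchestrator` classes, the `weekly_qualified_leads` metric, its threshold of 50, and the `request_lead_generation` stand-in for a CRM call are all assumptions. What it shows is the shift in where the logic lives: the CRM is just an endpoint, and the coordinating behavior sits in configuration at the center.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """One configurable orchestration rule: if a metric falls below a threshold, act."""
    name: str
    metric: str
    threshold: float
    action: str  # name of an action registered with the orchestrator


class Orchestrator:
    """Minimal sketch of a central orchestrator; components are only endpoints."""

    def __init__(self, rules: List[Rule], actions: Dict[str, Callable[[str], str]]) -> None:
        self.rules = rules      # behavior lives in configuration, not in the components
        self.actions = actions  # hypothetical wrappers around component APIs (e.g., a CRM)

    def evaluate(self, metrics: Dict[str, float]) -> List[str]:
        """Check one snapshot of monitored metrics and dispatch any triggered actions."""
        results = []
        for rule in self.rules:
            value = metrics.get(rule.metric)
            if value is not None and value < rule.threshold:
                results.append(self.actions[rule.action](rule.name))
        return results


def request_lead_generation(reason: str) -> str:
    # Hypothetical stand-in for a call to a CRM system's API.
    return f"CRM: start lead-generation campaign ({reason})"


rules = [Rule(name="demand lag", metric="weekly_qualified_leads",
              threshold=50, action="generate_leads")]
orchestrator = Orchestrator(rules, {"generate_leads": request_lead_generation})

# In a perpetually "running" ecosystem, a monitoring loop would call this continuously.
print(orchestrator.evaluate({"weekly_qualified_leads": 32}))
```

Because the rules are plain data, they can be changed without touching any component. That is the configurability the model depends on, and presumably the surface an AI/ML capability would tune over time.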

With a digitally connected ecosystem in place, the logical question becomes, “ok, what next?” Well, once you have discrete components that are integrated and orchestrated in a thoughtful manner that drives business outcomes, there are two ways to extend that value further:

- Optimize both the performance of individual components and the overall ecosystem through embedded AI/ML (something I’ll address in the next section)

- Connect that ecosystem internally to other intelligent ecosystems within the enterprise and use it as a foundation to drive digital integration with customers, partners, and suppliers

In the latter case, given that the orchestration model itself is meant to be dynamic and configurable, an organization’s ability to drive digital collaboration with third parties (starting with customers) should be substantially improved beyond the transaction-centric models largely used today.

Moving Beyond “Data Centricity”

There is a lot of discussion about data-centricity right now, largely under the premise that quality data can provide a foundation for meaningful insights that create business opportunity. The challenge I see lies in how many organizations model and execute their strategies for leveraging data more effectively.

The diagram above is meant to provide a simplistic representation of the logical model that is largely in place today to provide insights leveraging enterprise data. The overall assumption is that core systems should first publish their data to a centralized enterprise environment (data lake(s) in many instances). That data is then moved, transformed, enriched, etc. for the purpose of feeding downstream data solutions or analytical models that provide insights as to how to address whatever questions were originally the source of inquiry (e.g., customer churn, predictive maintenance).

The problem with the current model is that it is all reactive processing when the goal of our future state environment should be intelligent applications.

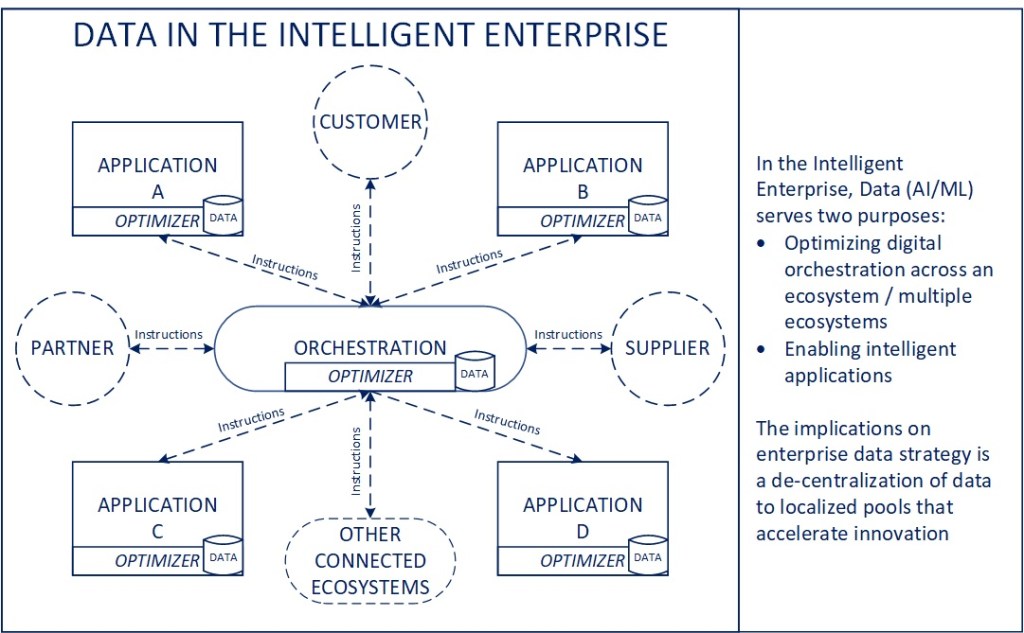

In the intelligent enterprise, AI/ML serves two purposes:

- Making the orchestration across a set of connected components more effective. This would be accomplished presumably by collecting and analyzing process performance data gathered as part of the ecosystem’s ongoing operation.

- Making applications more intelligent, to the point that user intervention is greatly simplified, data quality is automatically enforced and improved, and the execution and performance of the component itself are continuously improved in line with its role in the larger ecosystem. Given there are not a significant number of “intelligent applications” today, the logical progression would seem to be to first have a “configurable AI” capability that could integrate with existing applications, analyze their data and user interactions, and then make recommendations or simulate user actions in the interest of improving application performance. That capability would eventually be integrated within individual applications to make it more attuned to individual application-oriented needs, user task flows, and data sets.

The implications of this shift in application architecture for enterprise data strategy could be significant, as the goal of centralizing data for a larger “post-process” analytical effort would move upstream (at least in part) to the source applications, eliminating the need for a larger enterprise lake environment in favor of smaller data pools that facilitate change at a component/application level.

Wrapping Up

Bringing it all together, the purpose of this article was to share a concept of a connected future state technology environment where orchestration within and across ecosystems with embedded optimization could drive a significant amount of disruption in digital business.

As a conceptual model, there are undoubtedly gaps and unknowns to be considered, not the least of which is how to manage complexity in a distributed data strategy model and refactor existing transactional execution when it moves from being initiated by individual applications to a central orchestrator. Much to contemplate and consider, but fundamentally I believe this is where technology is going, and a substantial amount of digital capability will be unlocked in the process.

Feedback is welcome. The goal was to initiate discussion, not to suggest a well-defined “answer”. Hopefully the concepts will stir questions and reactions.

-CJG 03/27/2022

8 thoughts on “The Intelligent Enterprise”